Mirror of https://github.com/ynput/ayon-core.git

Fix typos / cosmetics

parent 41a2ee812a

commit 107e2e637e

111 changed files with 237 additions and 237 deletions

@ -157,7 +157,7 @@ class CollectAERender(abstract_collect_render.AbstractCollectRender):

|

|||

in url

|

||||

|

||||

Returns:

|

||||

(list) of absolut urls to rendered file

|

||||

(list) of absolute urls to rendered file

|

||||

"""

|

||||

start = render_instance.frameStart

|

||||

end = render_instance.frameEnd

|

||||

|

|

|

|||

|

|

@ -25,7 +25,7 @@ class SelectInvalidAction(pyblish.api.Action):

|

|||

invalid.extend(invalid_nodes)

|

||||

else:

|

||||

self.log.warning(

|

||||

"Failed plug-in doens't have any selectable objects."

|

||||

"Failed plug-in doesn't have any selectable objects."

|

||||

)

|

||||

|

||||

bpy.ops.object.select_all(action='DESELECT')

|

||||

|

|

|

|||

|

|

@ -9,7 +9,7 @@ import addon_utils

|

|||

def load_scripts(paths):

|

||||

"""Copy of `load_scripts` from Blender's implementation.

|

||||

|

||||

It is possible that whis function will be changed in future and usage will

|

||||

It is possible that this function will be changed in future and usage will

|

||||

be based on Blender version.

|

||||

"""

|

||||

import bpy_types

|

||||

|

|

|

|||

|

|

@ -21,7 +21,7 @@ class InstallPySideToBlender(PreLaunchHook):

|

|||

platforms = ["windows"]

|

||||

|

||||

def execute(self):

|

||||

# Prelaunch hook is not crutial

|

||||

# Prelaunch hook is not crucial

|

||||

try:

|

||||

self.inner_execute()

|

||||

except Exception:

|

||||

|

|

@ -156,7 +156,7 @@ class InstallPySideToBlender(PreLaunchHook):

|

|||

except pywintypes.error:

|

||||

pass

|

||||

|

||||

self.log.warning("Failed to instal PySide2 module to blender.")

|

||||

self.log.warning("Failed to install PySide2 module to blender.")

|

||||

|

||||

def is_pyside_installed(self, python_executable):

|

||||

"""Check if PySide2 module is in blender's pip list.

|

||||

|

|

|

|||

|

|

@ -22,7 +22,7 @@ class CreateAnimation(plugin.Creator):

|

|||

ops.execute_in_main_thread(mti)

|

||||

|

||||

def _process(self):

|

||||

# Get Instance Containter or create it if it does not exist

|

||||

# Get Instance Container or create it if it does not exist

|

||||

instances = bpy.data.collections.get(AVALON_INSTANCES)

|

||||

if not instances:

|

||||

instances = bpy.data.collections.new(name=AVALON_INSTANCES)

|

||||

|

|

|

|||

|

|

@ -22,7 +22,7 @@ class CreateCamera(plugin.Creator):

|

|||

ops.execute_in_main_thread(mti)

|

||||

|

||||

def _process(self):

|

||||

# Get Instance Containter or create it if it does not exist

|

||||

# Get Instance Container or create it if it does not exist

|

||||

instances = bpy.data.collections.get(AVALON_INSTANCES)

|

||||

if not instances:

|

||||

instances = bpy.data.collections.new(name=AVALON_INSTANCES)

|

||||

|

|

|

|||

|

|

@ -22,7 +22,7 @@ class CreateLayout(plugin.Creator):

|

|||

ops.execute_in_main_thread(mti)

|

||||

|

||||

def _process(self):

|

||||

# Get Instance Containter or create it if it does not exist

|

||||

# Get Instance Container or create it if it does not exist

|

||||

instances = bpy.data.collections.get(AVALON_INSTANCES)

|

||||

if not instances:

|

||||

instances = bpy.data.collections.new(name=AVALON_INSTANCES)

|

||||

|

|

|

|||

|

|

@ -22,7 +22,7 @@ class CreateModel(plugin.Creator):

|

|||

ops.execute_in_main_thread(mti)

|

||||

|

||||

def _process(self):

|

||||

# Get Instance Containter or create it if it does not exist

|

||||

# Get Instance Container or create it if it does not exist

|

||||

instances = bpy.data.collections.get(AVALON_INSTANCES)

|

||||

if not instances:

|

||||

instances = bpy.data.collections.new(name=AVALON_INSTANCES)

|

||||

|

|

|

|||

|

|

@ -22,7 +22,7 @@ class CreateRig(plugin.Creator):

|

|||

ops.execute_in_main_thread(mti)

|

||||

|

||||

def _process(self):

|

||||

# Get Instance Containter or create it if it does not exist

|

||||

# Get Instance Container or create it if it does not exist

|

||||

instances = bpy.data.collections.get(AVALON_INSTANCES)

|

||||

if not instances:

|

||||

instances = bpy.data.collections.new(name=AVALON_INSTANCES)

|

||||

|

|

|

|||

|

|

@ -14,7 +14,7 @@ class IncrementWorkfileVersion(pyblish.api.ContextPlugin):

|

|||

def process(self, context):

|

||||

|

||||

assert all(result["success"] for result in context.data["results"]), (

|

||||

"Publishing not succesfull so version is not increased.")

|

||||

"Publishing not successful so version is not increased.")

|

||||

|

||||

from openpype.lib import version_up

|

||||

path = context.data["currentFile"]

|

||||

|

|

|

|||

|

|

@ -32,7 +32,7 @@ class AppendCelactionAudio(pyblish.api.ContextPlugin):

|

|||

repr = next((r for r in reprs), None)

|

||||

if not repr:

|

||||

raise "Missing `audioMain` representation"

|

||||

self.log.info(f"represetation is: {repr}")

|

||||

self.log.info(f"representation is: {repr}")

|

||||

|

||||

audio_file = repr.get('data', {}).get('path', "")

|

||||

|

||||

|

|

@ -56,7 +56,7 @@ class AppendCelactionAudio(pyblish.api.ContextPlugin):

|

|||

representations (list): list for all representations

|

||||

|

||||

Returns:

|

||||

dict: subsets with version and representaions in keys

|

||||

dict: subsets with version and representations in keys

|

||||

"""

|

||||

|

||||

# Query all subsets for asset

|

||||

|

|

|

|||

|

|

@ -230,7 +230,7 @@ def maintain_current_timeline(to_timeline, from_timeline=None):

|

|||

project = get_current_project()

|

||||

working_timeline = from_timeline or project.GetCurrentTimeline()

|

||||

|

||||

# swith to the input timeline

|

||||

# switch to the input timeline

|

||||

project.SetCurrentTimeline(to_timeline)

|

||||

|

||||

try:

|

||||

|

|

|

|||

|

|

@ -40,7 +40,7 @@ class _FlameMenuApp(object):

|

|||

self.menu_group_name = menu_group_name

|

||||

self.dynamic_menu_data = {}

|

||||

|

||||

# flame module is only avaliable when a

|

||||

# flame module is only available when a

|

||||

# flame project is loaded and initialized

|

||||

self.flame = None

|

||||

try:

|

||||

|

|

|

|||

|

|

@ -37,7 +37,7 @@ class WireTapCom(object):

|

|||

|

||||

This way we are able to set new project with settings and

|

||||

correct colorspace policy. Also we are able to create new user

|

||||

or get actuall user with similar name (users are usually cloning

|

||||

or get actual user with similar name (users are usually cloning

|

||||

their profiles and adding date stamp into suffix).

|

||||

"""

|

||||

|

||||

|

|

@ -223,7 +223,7 @@ class WireTapCom(object):

|

|||

|

||||

volumes = []

|

||||

|

||||

# go trough all children and get volume names

|

||||

# go through all children and get volume names

|

||||

child_obj = WireTapNodeHandle()

|

||||

for child_idx in range(children_num):

|

||||

|

||||

|

|

@ -263,7 +263,7 @@ class WireTapCom(object):

|

|||

filtered_users = [user for user in used_names if user_name in user]

|

||||

|

||||

if filtered_users:

|

||||

# todo: need to find lastly created following regex patern for

|

||||

# todo: need to find lastly created following regex pattern for

|

||||

# date used in name

|

||||

return filtered_users.pop()

|

||||

|

||||

|

|

@ -308,7 +308,7 @@ class WireTapCom(object):

|

|||

|

||||

usernames = []

|

||||

|

||||

# go trough all children and get volume names

|

||||

# go through all children and get volume names

|

||||

child_obj = WireTapNodeHandle()

|

||||

for child_idx in range(children_num):

|

||||

|

||||

|

|

@ -355,7 +355,7 @@ class WireTapCom(object):

|

|||

if not requested:

|

||||

raise AttributeError((

|

||||

"Error: Cannot request number of "

|

||||

"childrens from the node {}. Make sure your "

|

||||

"children from the node {}. Make sure your "

|

||||

"wiretap service is running: {}").format(

|

||||

parent_path, parent.lastError())

|

||||

)

|

||||

|

|

|

|||

|

|

@ -234,7 +234,7 @@ class FtrackComponentCreator:

|

|||

).first()

|

||||

|

||||

if component_entity:

|

||||

# overwrite existing members in component enity

|

||||

# overwrite existing members in component entity

|

||||

# - get data for member from `ftrack.origin` location

|

||||

self._overwrite_members(component_entity, comp_data)

|

||||

|

||||

|

|

|

|||

|

|

@ -304,7 +304,7 @@ class FlameToFtrackPanel(object):

|

|||

self._resolve_project_entity()

|

||||

self._save_ui_state_to_cfg()

|

||||

|

||||

# get hanldes from gui input

|

||||

# get handles from gui input

|

||||

handles = self.handles_input.text()

|

||||

|

||||

# get frame start from gui input

|

||||

|

|

@ -517,7 +517,7 @@ class FlameToFtrackPanel(object):

|

|||

if self.temp_data_dir:

|

||||

shutil.rmtree(self.temp_data_dir)

|

||||

self.temp_data_dir = None

|

||||

print("All Temp data were destroied ...")

|

||||

print("All Temp data were destroyed ...")

|

||||

|

||||

def close(self):

|

||||

self._save_ui_state_to_cfg()

|

||||

|

|

|

|||

|

|

@ -16,7 +16,7 @@ def openpype_install():

|

|||

"""

|

||||

openpype.install()

|

||||

avalon.api.install(opflame)

|

||||

print("Avalon registred hosts: {}".format(

|

||||

print("Avalon registered hosts: {}".format(

|

||||

avalon.api.registered_host()))

|

||||

|

||||

|

||||

|

|

@ -100,7 +100,7 @@ def app_initialized(parent=None):

|

|||

"""

|

||||

Initialisation of the hook is starting from here

|

||||

|

||||

First it needs to test if it can import the flame modul.

|

||||

First it needs to test if it can import the flame module.

|

||||

This will happen only in case a project has been loaded.

|

||||

Then `app_initialized` will load main Framework which will load

|

||||

all menu objects as apps.

|

||||

|

|

|

|||

|

|

@ -65,7 +65,7 @@ def _sync_utility_scripts(env=None):

|

|||

if _itm not in remove_black_list:

|

||||

skip = True

|

||||

|

||||

# do not skyp if pyc in extension

|

||||

# do not skip if pyc in extension

|

||||

if not os.path.isdir(_itm) and "pyc" in os.path.splitext(_itm)[-1]:

|

||||

skip = False

|

||||

|

||||

|

|

|

|||

|

|

@ -13,7 +13,7 @@ from pprint import pformat

|

|||

class FlamePrelaunch(PreLaunchHook):

|

||||

""" Flame prelaunch hook

|

||||

|

||||

Will make sure flame_script_dirs are coppied to user's folder defined

|

||||

Will make sure flame_script_dirs are copied to user's folder defined

|

||||

in environment var FLAME_SCRIPT_DIR.

|

||||

"""

|

||||

app_groups = ["flame"]

|

||||

|

|

|

|||

|

|

@ -127,7 +127,7 @@ def create_time_effects(otio_clip, item):

|

|||

# # add otio effect to clip effects

|

||||

# otio_clip.effects.append(otio_effect)

|

||||

|

||||

# # loop trought and get all Timewarps

|

||||

# # loop through and get all Timewarps

|

||||

# for effect in subTrackItems:

|

||||

# if ((track_item not in effect.linkedItems())

|

||||

# and (len(effect.linkedItems()) > 0)):

|

||||

|

|

@ -615,11 +615,11 @@ def create_otio_timeline(sequence):

|

|||

# Add Gap if needed

|

||||

if itemindex == 0:

|

||||

# if it is first track item at track then add

|

||||

# it to previouse item

|

||||

# it to previous item

|

||||

prev_item = segment_data

|

||||

|

||||

else:

|

||||

# get previouse item

|

||||

# get previous item

|

||||

prev_item = segments_ordered[itemindex - 1]

|

||||

|

||||

log.debug("_ segment_data: {}".format(segment_data))

|

||||

|

|

|

|||

|

|

@ -52,7 +52,7 @@ def install():

|

|||

|

||||

|

||||

def uninstall():

|

||||

"""Uninstall all tha was installed

|

||||

"""Uninstall all that was installed

|

||||

|

||||

This is where you undo everything that was done in `install()`.

|

||||

That means, removing menus, deregistering families and data

|

||||

|

|

|

|||

|

|

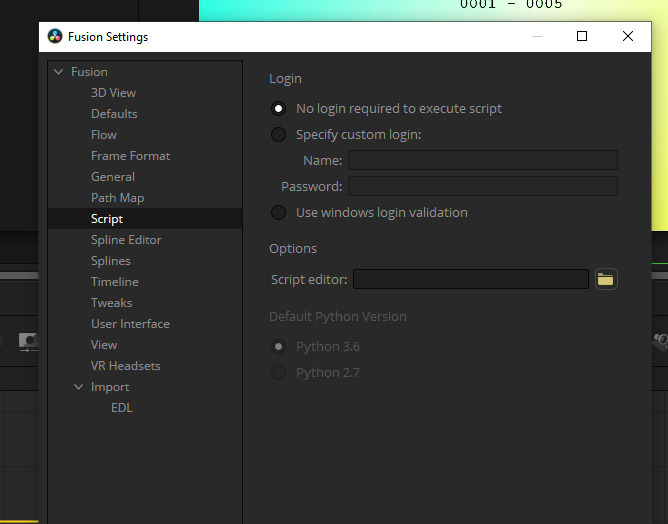

@ -12,7 +12,7 @@ class FusionPrelaunch(PreLaunchHook):

|

|||

app_groups = ["fusion"]

|

||||

|

||||

def execute(self):

|

||||

# making sure pyton 3.6 is installed at provided path

|

||||

# making sure python 3.6 is installed at provided path

|

||||

py36_dir = os.path.normpath(self.launch_context.env.get("PYTHON36", ""))

|

||||

assert os.path.isdir(py36_dir), (

|

||||

"Python 3.6 is not installed at the provided folder path. Either "

|

||||

|

|

|

|||

|

|

@ -185,22 +185,22 @@ class FusionLoadSequence(api.Loader):

|

|||

- We do the same like Fusion - allow fusion to take control.

|

||||

|

||||

- HoldFirstFrame: Fusion resets this to 0

|

||||

- We preverse the value.

|

||||

- We preserve the value.

|

||||

|

||||

- HoldLastFrame: Fusion resets this to 0

|

||||

- We preverse the value.

|

||||

- We preserve the value.

|

||||

|

||||

- Reverse: Fusion resets to disabled if "Loop" is not enabled.

|

||||

- We preserve the value.

|

||||

|

||||

- Depth: Fusion resets to "Format"

|

||||

- We preverse the value.

|

||||

- We preserve the value.

|

||||

|

||||

- KeyCode: Fusion resets to ""

|

||||

- We preverse the value.

|

||||

- We preserve the value.

|

||||

|

||||

- TimeCodeOffset: Fusion resets to 0

|

||||

- We preverse the value.

|

||||

- We preserve the value.

|

||||

|

||||

"""

|

||||

|

||||

|

|

|

|||

|

|

@ -124,7 +124,7 @@ class FusionSubmitDeadline(pyblish.api.InstancePlugin):

|

|||

|

||||

# Include critical variables with submission

|

||||

keys = [

|

||||

# TODO: This won't work if the slaves don't have accesss to

|

||||

# TODO: This won't work if the slaves don't have access to

|

||||

# these paths, such as if slaves are running Linux and the

|

||||

# submitter is on Windows.

|

||||

"PYTHONPATH",

|

||||

|

|

|

|||

|

|

@ -85,7 +85,7 @@ def _format_filepath(session):

|

|||

new_filename = "{}_{}_slapcomp_v001.comp".format(project, asset)

|

||||

new_filepath = os.path.join(slapcomp_dir, new_filename)

|

||||

|

||||

# Create new unqiue filepath

|

||||

# Create new unique filepath

|

||||

if os.path.exists(new_filepath):

|

||||

new_filepath = pype.version_up(new_filepath)

|

||||

|

||||

|

|

|

|||

|

|

@ -16,7 +16,7 @@ def main(env):

|

|||

# activate resolve from pype

|

||||

avalon.api.install(avalon.fusion)

|

||||

|

||||

log.info(f"Avalon registred hosts: {avalon.api.registered_host()}")

|

||||

log.info(f"Avalon registered hosts: {avalon.api.registered_host()}")

|

||||

|

||||

menu.launch_openpype_menu()

|

||||

|

||||

|

|

|

|||

|

|

@ -2,13 +2,13 @@

|

|||

|

||||

### Development

|

||||

|

||||

#### Setting up ESLint as linter for javasript code

|

||||

#### Setting up ESLint as linter for javascript code

|

||||

|

||||

You nee [node.js](https://nodejs.org/en/) installed. All you need to do then

|

||||

is to run:

|

||||

|

||||

```sh

|

||||

npm intall

|

||||

npm install

|

||||

```

|

||||

in **js** directory. This will install eslint and all requirements locally.

|

||||

|

||||

|

|

|

|||

|

|

@ -18,11 +18,11 @@ if (typeof $ === 'undefined'){

|

|||

* @classdesc Image Sequence loader JS code.

|

||||

*/

|

||||

var ImageSequenceLoader = function() {

|

||||

this.PNGTransparencyMode = 0; // Premultiplied wih Black

|

||||

this.TGATransparencyMode = 0; // Premultiplied wih Black

|

||||

this.SGITransparencyMode = 0; // Premultiplied wih Black

|

||||

this.PNGTransparencyMode = 0; // Premultiplied with Black

|

||||

this.TGATransparencyMode = 0; // Premultiplied with Black

|

||||

this.SGITransparencyMode = 0; // Premultiplied with Black

|

||||

this.LayeredPSDTransparencyMode = 1; // Straight

|

||||

this.FlatPSDTransparencyMode = 2; // Premultiplied wih White

|

||||

this.FlatPSDTransparencyMode = 2; // Premultiplied with White

|

||||

};

|

||||

|

||||

|

||||

|

|

@ -84,7 +84,7 @@ ImageSequenceLoader.getUniqueColumnName = function(columnPrefix) {

|

|||

* @return {string} Read node name

|

||||

*

|

||||

* @example

|

||||

* // Agrguments are in following order:

|

||||

* // Arguments are in following order:

|

||||

* var args = [

|

||||

* files, // Files in file sequences.

|

||||

* asset, // Asset name.

|

||||

|

|

@ -97,11 +97,11 @@ ImageSequenceLoader.prototype.importFiles = function(args) {

|

|||

MessageLog.trace("ImageSequence:: " + typeof PypeHarmony);

|

||||

MessageLog.trace("ImageSequence $:: " + typeof $);

|

||||

MessageLog.trace("ImageSequence OH:: " + typeof PypeHarmony.OpenHarmony);

|

||||

var PNGTransparencyMode = 0; // Premultiplied wih Black

|

||||

var TGATransparencyMode = 0; // Premultiplied wih Black

|

||||

var SGITransparencyMode = 0; // Premultiplied wih Black

|

||||

var PNGTransparencyMode = 0; // Premultiplied with Black

|

||||

var TGATransparencyMode = 0; // Premultiplied with Black

|

||||

var SGITransparencyMode = 0; // Premultiplied with Black

|

||||

var LayeredPSDTransparencyMode = 1; // Straight

|

||||

var FlatPSDTransparencyMode = 2; // Premultiplied wih White

|

||||

var FlatPSDTransparencyMode = 2; // Premultiplied with White

|

||||

|

||||

var doc = $.scn;

|

||||

var files = args[0];

|

||||

|

|

@ -224,7 +224,7 @@ ImageSequenceLoader.prototype.importFiles = function(args) {

|

|||

* @return {string} Read node name

|

||||

*

|

||||

* @example

|

||||

* // Agrguments are in following order:

|

||||

* // Arguments are in following order:

|

||||

* var args = [

|

||||

* files, // Files in file sequences

|

||||

* name, // Node name

|

||||

|

|

|

|||

|

|

@ -13,11 +13,11 @@ copy_files = """function copyFile(srcFilename, dstFilename)

|

|||

}

|

||||

"""

|

||||

|

||||

import_files = """var PNGTransparencyMode = 1; //Premultiplied wih Black

|

||||

var TGATransparencyMode = 0; //Premultiplied wih Black

|

||||

var SGITransparencyMode = 0; //Premultiplied wih Black

|

||||

import_files = """var PNGTransparencyMode = 1; //Premultiplied with Black

|

||||

var TGATransparencyMode = 0; //Premultiplied with Black

|

||||

var SGITransparencyMode = 0; //Premultiplied with Black

|

||||

var LayeredPSDTransparencyMode = 1; //Straight

|

||||

var FlatPSDTransparencyMode = 2; //Premultiplied wih White

|

||||

var FlatPSDTransparencyMode = 2; //Premultiplied with White

|

||||

|

||||

function getUniqueColumnName( column_prefix )

|

||||

{

|

||||

|

|

@ -140,11 +140,11 @@ function import_files(args)

|

|||

import_files

|

||||

"""

|

||||

|

||||

replace_files = """var PNGTransparencyMode = 1; //Premultiplied wih Black

|

||||

var TGATransparencyMode = 0; //Premultiplied wih Black

|

||||

var SGITransparencyMode = 0; //Premultiplied wih Black

|

||||

replace_files = """var PNGTransparencyMode = 1; //Premultiplied with Black

|

||||

var TGATransparencyMode = 0; //Premultiplied with Black

|

||||

var SGITransparencyMode = 0; //Premultiplied with Black

|

||||

var LayeredPSDTransparencyMode = 1; //Straight

|

||||

var FlatPSDTransparencyMode = 2; //Premultiplied wih White

|

||||

var FlatPSDTransparencyMode = 2; //Premultiplied with White

|

||||

|

||||

function replace_files(args)

|

||||

{

|

||||

|

|

|

|||

|

|

@ -31,7 +31,7 @@ def beforeNewProjectCreated(event):

|

|||

|

||||

def afterNewProjectCreated(event):

|

||||

log.info("after new project created event...")

|

||||

# sync avalon data to project properities

|

||||

# sync avalon data to project properties

|

||||

sync_avalon_data_to_workfile()

|

||||

|

||||

# add tags from preset

|

||||

|

|

@ -51,7 +51,7 @@ def beforeProjectLoad(event):

|

|||

|

||||

def afterProjectLoad(event):

|

||||

log.info("after project load event...")

|

||||

# sync avalon data to project properities

|

||||

# sync avalon data to project properties

|

||||

sync_avalon_data_to_workfile()

|

||||

|

||||

# add tags from preset

|

||||

|

|

|

|||

|

|

@ -299,7 +299,7 @@ def get_track_item_pype_data(track_item):

|

|||

if not tag:

|

||||

return None

|

||||

|

||||

# get tag metadata attribut

|

||||

# get tag metadata attribute

|

||||

tag_data = tag.metadata()

|

||||

# convert tag metadata to normal keys names and values to correct types

|

||||

for k, v in dict(tag_data).items():

|

||||

|

|

@ -402,7 +402,7 @@ def sync_avalon_data_to_workfile():

|

|||

try:

|

||||

project.setProjectDirectory(active_project_root)

|

||||

except Exception:

|

||||

# old way of seting it

|

||||

# old way of setting it

|

||||

project.setProjectRoot(active_project_root)

|

||||

|

||||

# get project data from avalon db

|

||||

|

|

@ -614,7 +614,7 @@ def create_nuke_workfile_clips(nuke_workfiles, seq=None):

|

|||

if not seq:

|

||||

seq = hiero.core.Sequence('NewSequences')

|

||||

root.addItem(hiero.core.BinItem(seq))

|

||||

# todo will ned to define this better

|

||||

# todo will need to define this better

|

||||

# track = seq[1] # lazy example to get a destination# track

|

||||

clips_lst = []

|

||||

for nk in nuke_workfiles:

|

||||

|

|

@ -838,7 +838,7 @@ def apply_colorspace_project():

|

|||

# remove the TEMP file as we dont need it

|

||||

os.remove(copy_current_file_tmp)

|

||||

|

||||

# use the code from bellow for changing xml hrox Attributes

|

||||

# use the code from below for changing xml hrox Attributes

|

||||

presets.update({"name": os.path.basename(copy_current_file)})

|

||||

|

||||

# read HROX in as QDomSocument

|

||||

|

|

@ -874,7 +874,7 @@ def apply_colorspace_clips():

|

|||

if "default" in clip_colorspace:

|

||||

continue

|

||||

|

||||

# check if any colorspace presets for read is mathing

|

||||

# check if any colorspace presets for read is matching

|

||||

preset_clrsp = None

|

||||

for k in presets:

|

||||

if not bool(re.search(k["regex"], clip_media_source_path)):

|

||||

|

|

@ -931,7 +931,7 @@ def get_sequence_pattern_and_padding(file):

|

|||

Can find file.0001.ext, file.%02d.ext, file.####.ext

|

||||

|

||||

Return:

|

||||

string: any matching sequence patern

|

||||

string: any matching sequence pattern

|

||||

int: padding of sequnce numbering

|

||||

"""

|

||||

foundall = re.findall(

|

||||

|

|

@ -950,7 +950,7 @@ def get_sequence_pattern_and_padding(file):

|

|||

|

||||

|

||||

def sync_clip_name_to_data_asset(track_items_list):

|

||||

# loop trough all selected clips

|

||||

# loop through all selected clips

|

||||

for track_item in track_items_list:

|

||||

# ignore if parent track is locked or disabled

|

||||

if track_item.parent().isLocked():

|

||||

|

|

|

|||

|

|

@ -92,7 +92,7 @@ def create_time_effects(otio_clip, track_item):

|

|||

# add otio effect to clip effects

|

||||

otio_clip.effects.append(otio_effect)

|

||||

|

||||

# loop trought and get all Timewarps

|

||||

# loop through and get all Timewarps

|

||||

for effect in subTrackItems:

|

||||

if ((track_item not in effect.linkedItems())

|

||||

and (len(effect.linkedItems()) > 0)):

|

||||

|

|

@ -388,11 +388,11 @@ def create_otio_timeline():

|

|||

# Add Gap if needed

|

||||

if itemindex == 0:

|

||||

# if it is first track item at track then add

|

||||

# it to previouse item

|

||||

# it to previous item

|

||||

return track_item

|

||||

|

||||

else:

|

||||

# get previouse item

|

||||

# get previous item

|

||||

return track_item.parent().items()[itemindex - 1]

|

||||

|

||||

# get current timeline

|

||||

|

|

@ -416,11 +416,11 @@ def create_otio_timeline():

|

|||

# Add Gap if needed

|

||||

if itemindex == 0:

|

||||

# if it is first track item at track then add

|

||||

# it to previouse item

|

||||

# it to previous item

|

||||

prev_item = track_item

|

||||

|

||||

else:

|

||||

# get previouse item

|

||||

# get previous item

|

||||

prev_item = track_item.parent().items()[itemindex - 1]

|

||||

|

||||

# calculate clip frame range difference from each other

|

||||

|

|

|

|||

|

|

@ -146,7 +146,7 @@ class CreatorWidget(QtWidgets.QDialog):

|

|||

# convert label text to normal capitalized text with spaces

|

||||

label_text = self.camel_case_split(text)

|

||||

|

||||

# assign the new text to lable widget

|

||||

# assign the new text to label widget

|

||||

label = QtWidgets.QLabel(label_text)

|

||||

label.setObjectName("LineLabel")

|

||||

|

||||

|

|

@ -337,7 +337,7 @@ class SequenceLoader(avalon.Loader):

|

|||

"Sequentially in order"

|

||||

],

|

||||

default="Original timing",

|

||||

help="Would you like to place it at orignal timing?"

|

||||

help="Would you like to place it at original timing?"

|

||||

)

|

||||

]

|

||||

|

||||

|

|

@ -475,7 +475,7 @@ class ClipLoader:

|

|||

def _get_asset_data(self):

|

||||

""" Get all available asset data

|

||||

|

||||

joint `data` key with asset.data dict into the representaion

|

||||

joint `data` key with asset.data dict into the representation

|

||||

|

||||

"""

|

||||

asset_name = self.context["representation"]["context"]["asset"]

|

||||

|

|

@ -550,7 +550,7 @@ class ClipLoader:

|

|||

(self.timeline_out - self.timeline_in + 1)

|

||||

+ self.handle_start + self.handle_end) < self.media_duration)

|

||||

|

||||

# if slate is on then remove the slate frame from begining

|

||||

# if slate is on then remove the slate frame from beginning

|

||||

if slate_on:

|

||||

self.media_duration -= 1

|

||||

self.handle_start += 1

|

||||

|

|

@ -634,8 +634,8 @@ class PublishClip:

|

|||

"track": "sequence",

|

||||

}

|

||||

|

||||

# parents search patern

|

||||

parents_search_patern = r"\{([a-z]*?)\}"

|

||||

# parents search pattern

|

||||

parents_search_pattern = r"\{([a-z]*?)\}"

|

||||

|

||||

# default templates for non-ui use

|

||||

rename_default = False

|

||||

|

|

@ -719,7 +719,7 @@ class PublishClip:

|

|||

return self.track_item

|

||||

|

||||

def _populate_track_item_default_data(self):

|

||||

""" Populate default formating data from track item. """

|

||||

""" Populate default formatting data from track item. """

|

||||

|

||||

self.track_item_default_data = {

|

||||

"_folder_": "shots",

|

||||

|

|

@ -814,7 +814,7 @@ class PublishClip:

|

|||

# mark review layer

|

||||

if self.review_track and (

|

||||

self.review_track not in self.review_track_default):

|

||||

# if review layer is defined and not the same as defalut

|

||||

# if review layer is defined and not the same as default

|

||||

self.review_layer = self.review_track

|

||||

# shot num calculate

|

||||

if self.rename_index == 0:

|

||||

|

|

@ -863,7 +863,7 @@ class PublishClip:

|

|||

# in case track name and subset name is the same then add

|

||||

if self.subset_name == self.track_name:

|

||||

hero_data["subset"] = self.subset

|

||||

# assing data to return hierarchy data to tag

|

||||

# assign data to return hierarchy data to tag

|

||||

tag_hierarchy_data = hero_data

|

||||

|

||||

# add data to return data dict

|

||||

|

|

@ -897,7 +897,7 @@ class PublishClip:

|

|||

type

|

||||

)

|

||||

|

||||

# first collect formating data to use for formating template

|

||||

# first collect formatting data to use for formatting template

|

||||

formating_data = {}

|

||||

for _k, _v in self.hierarchy_data.items():

|

||||

value = _v["value"].format(

|

||||

|

|

@ -915,9 +915,9 @@ class PublishClip:

|

|||

""" Create parents and return it in list. """

|

||||

self.parents = []

|

||||

|

||||

patern = re.compile(self.parents_search_patern)

|

||||

pattern = re.compile(self.parents_search_pattern)

|

||||

|

||||

par_split = [(patern.findall(t).pop(), t)

|

||||

par_split = [(pattern.findall(t).pop(), t)

|

||||

for t in self.hierarchy.split("/")]

|

||||

|

||||

for type, template in par_split:
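
Note on the hunk above: the renamed `parents_search_pattern` is what splits the hierarchy template into `(key, template)` pairs. A minimal sketch of that split, assuming a hypothetical hierarchy string (`"{folder}/{sequence}"` is made up for illustration; the real value comes from `self.hierarchy`):

```python
import re

# Pattern copied from the hunk above: captures a lowercase key inside braces.
parents_search_pattern = r"\{([a-z]*?)\}"
pattern = re.compile(parents_search_pattern)

# Hypothetical hierarchy template, not taken from the repository.
hierarchy = "{folder}/{sequence}"

# Same comprehension as in the diff: the last captured key of each
# "/"-separated segment is paired with the segment itself.
par_split = [(pattern.findall(t).pop(), t) for t in hierarchy.split("/")]

for key, template in par_split:
    print(key, template)
```

A segment with no `{key}` placeholder would make `findall` return an empty list, so `.pop()` would raise `IndexError`; the sketch assumes every segment has at least one placeholder.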

|

||||

|

|

|

|||

|

|

@ -1,5 +1,5 @@

|

|||

# PimpMySpreadsheet 1.0, Antony Nasce, 23/05/13.

|

||||

# Adds custom spreadsheet columns and right-click menu for setting the Shot Status, and Artist Shot Assignement.

|

||||

# Adds custom spreadsheet columns and right-click menu for setting the Shot Status, and Artist Shot Assignment.

|

||||

# gStatusTags is a global dictionary of key(status)-value(icon) pairs, which can be overridden with custom icons if required

|

||||

# Requires Hiero 1.7v2 or later.

|

||||

# Install Instructions: Copy to ~/.hiero/Python/StartupUI

|

||||

|

|

|

|||

|

|

@ -172,7 +172,7 @@ def add_tags_to_workfile():

|

|||

}

|

||||

}

|

||||

|

||||

# loop trough tag data dict and create deep tag structure

|

||||

# loop through tag data dict and create deep tag structure

|

||||

for _k, _val in nks_pres_tags.items():

|

||||

# check if key is not decorated with [] so it is defined as bin

|

||||

bin_find = None

|

||||

|

|

|

|||

|

|

@ -139,7 +139,7 @@ class CreateShotClip(phiero.Creator):

|

|||

"type": "QComboBox",

|

||||

"label": "Subset Name",

|

||||

"target": "ui",

|

||||

"toolTip": "chose subset name patern, if <track_name> is selected, name of track layer will be used", # noqa

|

||||

"toolTip": "chose subset name pattern, if <track_name> is selected, name of track layer will be used", # noqa

|

||||

"order": 0},

|

||||

"subsetFamily": {

|

||||

"value": ["plate", "take"],

|

||||

|

|

|

|||

|

|

@ -34,7 +34,7 @@ class PreCollectClipEffects(pyblish.api.InstancePlugin):

|

|||

if clip_effect_items:

|

||||

tracks_effect_items[track_index] = clip_effect_items

|

||||

|

||||

# process all effects and devide them to instance

|

||||

# process all effects and divide them to instance

|

||||

for _track_index, sub_track_items in tracks_effect_items.items():

|

||||

# skip if track index is the same as review track index

|

||||

if review and review_track_index == _track_index:

|

||||

|

|

@ -156,7 +156,7 @@ class PreCollectClipEffects(pyblish.api.InstancePlugin):

|

|||

'postage_stamp_frame', 'maskChannel', 'export_cc',

|

||||

'select_cccid', 'mix', 'version', 'matrix']

|

||||

|

||||

# loop trough all knobs and collect not ignored

|

||||

# loop through all knobs and collect not ignored

|

||||

# and any with any value

|

||||

for knob in node.knobs().keys():

|

||||

# skip nodes in ignore keys

|

||||

|

|

|

|||

|

|

@ -264,7 +264,7 @@ class PrecollectInstances(pyblish.api.ContextPlugin):

|

|||

timeline_range = self.create_otio_time_range_from_timeline_item_data(

|

||||

track_item)

|

||||

|

||||

# loop trough audio track items and search for overlaping clip

|

||||

# loop through audio track items and search for overlapping clip

|

||||

for otio_audio in self.audio_track_items:

|

||||

parent_range = otio_audio.range_in_parent()

|

||||

|

||||

|

|

|

|||

|

|

@ -5,7 +5,7 @@ class CollectClipResolution(pyblish.api.InstancePlugin):

|

|||

"""Collect clip geometry resolution"""

|

||||

|

||||

order = pyblish.api.CollectorOrder - 0.1

|

||||

label = "Collect Clip Resoluton"

|

||||

label = "Collect Clip Resolution"

|

||||

hosts = ["hiero"]

|

||||

families = ["clip"]

|

||||

|

||||

|

|

|

|||

|

|

@ -52,7 +52,7 @@ class PrecollectRetime(api.InstancePlugin):

|

|||

handle_end

|

||||

))

|

||||

|

||||

# loop withing subtrack items

|

||||

# loop within subtrack items

|

||||

time_warp_nodes = []

|

||||

source_in_change = 0

|

||||

source_out_change = 0

|

||||

|

|

@ -76,7 +76,7 @@ class PrecollectRetime(api.InstancePlugin):

|

|||

(timeline_in - handle_start),

|

||||

(timeline_out + handle_end) + 1)

|

||||

]

|

||||

# calculate differnce

|

||||

# calculate difference

|

||||

diff_in = (node["lookup"].getValueAt(

|

||||

timeline_in)) - timeline_in

|

||||

diff_out = (node["lookup"].getValueAt(

|

||||

|

|

|

|||

|

|

@ -184,7 +184,7 @@ def uv_from_element(element):

|

|||

parent = element.split(".", 1)[0]

|

||||

|

||||

# Maya is funny in that when the transform of the shape

|

||||

# of the component elemen has children, the name returned

|

||||

# of the component element has children, the name returned

|

||||

# by that elementection is the shape. Otherwise, it is

|

||||

# the transform. So lets see what type we're dealing with here.

|

||||

if cmds.nodeType(parent) in supported:

|

||||

|

|

@ -1595,7 +1595,7 @@ def get_container_transforms(container, members=None, root=False):

|

|||

Args:

|

||||

container (dict): the container

|

||||

members (list): optional and convenience argument

|

||||

root (bool): return highest node in hierachy if True

|

||||

root (bool): return highest node in hierarchy if True

|

||||

|

||||

Returns:

|

||||

root (list / str):

|

||||

|

|

@ -2482,7 +2482,7 @@ class shelf():

|

|||

def _get_render_instances():

|

||||

"""Return all 'render-like' instances.

|

||||

|

||||

This returns list of instance sets that needs to receive informations

|

||||

This returns list of instance sets that needs to receive information

|

||||

about render layer changes.

|

||||

|

||||

Returns:

|

||||

|

|

|

|||

|

|

@ -506,8 +506,8 @@

|

|||

"transforms",

|

||||

"local"

|

||||

],

|

||||

"title": "# Copy Local Transfroms",

|

||||

"tooltip": "Copy local transfroms"

|

||||

"title": "# Copy Local Transforms",

|

||||

"tooltip": "Copy local transforms"

|

||||

},

|

||||

{

|

||||

"type": "action",

|

||||

|

|

@ -520,8 +520,8 @@

|

|||

"transforms",

|

||||

"matrix"

|

||||

],

|

||||

"title": "# Copy Matrix Transfroms",

|

||||

"tooltip": "Copy Matrix transfroms"

|

||||

"title": "# Copy Matrix Transforms",

|

||||

"tooltip": "Copy Matrix transforms"

|

||||

},

|

||||

{

|

||||

"type": "action",

|

||||

|

|

@ -842,7 +842,7 @@

|

|||

"sourcetype": "file",

|

||||

"tags": ["cleanup", "remove_user_defined_attributes"],

|

||||

"title": "# Remove User Defined Attributes",

|

||||

"tooltip": "Remove all user-defined attributs from all nodes"

|

||||

"tooltip": "Remove all user-defined attributes from all nodes"

|

||||

},

|

||||

{

|

||||

"type": "action",

|

||||

|

|

|

|||

|

|

@ -794,8 +794,8 @@

|

|||

"transforms",

|

||||

"local"

|

||||

],

|

||||

"title": "Copy Local Transfroms",

|

||||

"tooltip": "Copy local transfroms"

|

||||

"title": "Copy Local Transforms",

|

||||

"tooltip": "Copy local transforms"

|

||||

},

|

||||

{

|

||||

"type": "action",

|

||||

|

|

@ -808,8 +808,8 @@

|

|||

"transforms",

|

||||

"matrix"

|

||||

],

|

||||

"title": "Copy Matrix Transfroms",

|

||||

"tooltip": "Copy Matrix transfroms"

|

||||

"title": "Copy Matrix Transforms",

|

||||

"tooltip": "Copy Matrix transforms"

|

||||

},

|

||||

{

|

||||

"type": "action",

|

||||

|

|

@ -1274,7 +1274,7 @@

|

|||

"sourcetype": "file",

|

||||

"tags": ["cleanup", "remove_user_defined_attributes"],

|

||||

"title": "Remove User Defined Attributes",

|

||||

"tooltip": "Remove all user-defined attributs from all nodes"

|

||||

"tooltip": "Remove all user-defined attributes from all nodes"

|

||||

},

|

||||

{

|

||||

"type": "action",

|

||||

|

|

|

|||

|

|

@ -341,7 +341,7 @@ def update_package(set_container, representation):

|

|||

def update_scene(set_container, containers, current_data, new_data, new_file):

|

||||

"""Updates the hierarchy, assets and their matrix

|

||||

|

||||

Updates the following withing the scene:

|

||||

Updates the following within the scene:

|

||||

* Setdress hierarchy alembic

|

||||

* Matrix

|

||||

* Parenting

|

||||

|

|

|

|||

|

|

@ -92,7 +92,7 @@ class ShaderDefinitionsEditor(QtWidgets.QWidget):

|

|||

def _write_definition_file(self, content, force=False):

|

||||

"""Write content as definition to file in database.

|

||||

|

||||

Before file is writen, check is made if its content has not

|

||||

Before file is written, check is made if its content has not

|

||||

changed. If is changed, warning is issued to user if he wants

|

||||

it to overwrite. Note: GridFs doesn't allow changing file content.

|

||||

You need to delete existing file and create new one.

|

||||

|

|

|

|||

|

|

@ -53,8 +53,8 @@ class CreateRender(plugin.Creator):

|

|||

renderer.

|

||||

ass (bool): Submit as ``ass`` file for standalone Arnold renderer.

|

||||

tileRendering (bool): Instance is set to tile rendering mode. We

|

||||

won't submit actuall render, but we'll make publish job to wait

|

||||

for Tile Assemly job done and then publish.

|

||||

won't submit actual render, but we'll make publish job to wait

|

||||

for Tile Assembly job done and then publish.

|

||||

|

||||

See Also:

|

||||

https://pype.club/docs/artist_hosts_maya#creating-basic-render-setup

|

||||

|

|

|

|||

|

|

@ -24,7 +24,7 @@ class CollectAssembly(pyblish.api.InstancePlugin):

|

|||

"""

|

||||

|

||||

order = pyblish.api.CollectorOrder + 0.49

|

||||

label = "Assemby"

|

||||

label = "Assembly"

|

||||

families = ["assembly"]

|

||||

|

||||

def process(self, instance):

|

||||

|

|

|

|||

|

|

@ -126,7 +126,7 @@ class CollectMayaRender(pyblish.api.ContextPlugin):

|

|||

r"^.+:(.*)", layer).group(1)

|

||||

except IndexError:

|

||||

msg = "Invalid layer name in set [ {} ]".format(layer)

|

||||

self.log.warnig(msg)

|

||||

self.log.warning(msg)

|

||||

continue

|

||||

|

||||

self.log.info("processing %s" % layer)

|

||||

|

|
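Note on the hunk above: correcting `warnig` to `warning` repairs the logging call, but `re.search` returns `None` when the pattern does not match, so calling `.group(1)` on the result raises `AttributeError`, not `IndexError`. Assuming the `try` block wraps only the `re.search(...).group(1)` call shown in the hunk, the warning branch may never be reached for a layer name without a namespace separator. A minimal sketch of an explicit check follows; the helper name `expected_layer_name` is hypothetical and not part of the commit.

```python
import re

# Same pattern as in the hunk: everything after the last ":" in the name.
LAYER_PATTERN = re.compile(r"^.+:(.*)")


def expected_layer_name(layer):
    """Return the text after the last ':' in `layer`, or None if no match.

    Hypothetical helper for illustration only. It tests the match object
    explicitly instead of relying on an exception being raised.
    """
    match = LAYER_PATTERN.search(layer)
    if match is None:
        # re.search found nothing; calling .group(1) here would raise
        # AttributeError because None has no `group` attribute.
        return None
    return match.group(1)


if __name__ == "__main__":
    for name in ("rs:beauty", "beauty"):
        print(name, "->", expected_layer_name(name))
```

A caller could then log the "Invalid layer name" warning and `continue` whenever the helper returns `None`.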

|

|||

|

|

@ -48,7 +48,7 @@ class CollectVrayScene(pyblish.api.InstancePlugin):

|

|||

expected_layer_name = re.search(r"^.+:(.*)", layer).group(1)

|

||||

except IndexError:

|

||||

msg = "Invalid layer name in set [ {} ]".format(layer)

|

||||

self.log.warnig(msg)

|

||||

self.log.warning(msg)

|

||||

continue

|

||||

|

||||

self.log.info("processing %s" % layer)

|

||||

|

|

|

|||

|

|

@ -36,7 +36,7 @@ class ExtractVrayscene(openpype.api.Extractor):

|

|||

else:

|

||||

node = vray_settings[0]

|

||||

|

||||

# setMembers on vrayscene_layer shoudl contain layer name.

|

||||

# setMembers on vrayscene_layer should contain layer name.

|

||||

layer_name = instance.data.get("layer")

|

||||

|

||||

staging_dir = self.staging_dir(instance)

|

||||

|

|

@ -111,7 +111,7 @@ class ExtractVrayscene(openpype.api.Extractor):

|

|||

layer (str): layer name.

|

||||

template (str): token template.

|

||||

start_frame (int, optional): start frame - if set we use

|

||||

mutliple files export mode.

|

||||

multiple files export mode.

|

||||

|

||||

Returns:

|

||||

str: formatted path.

|

||||

|

|

|

|||

|

|

@ -331,7 +331,7 @@ class MayaSubmitMuster(pyblish.api.InstancePlugin):

|

|||

# but dispatcher (Server) and not render clients. Render clients

|

||||

# inherit environment from publisher including PATH, so there's

|

||||

# no problem finding PYPE, but there is now way (as far as I know)

|

||||

# to set environment dynamically for dispatcher. Therefor this hack.

|

||||

# to set environment dynamically for dispatcher. Therefore this hack.

|

||||

args = [muster_python,

|

||||

_get_script().replace('\\', '\\\\'),

|

||||

"--paths",

|

||||

|

|

@ -478,7 +478,7 @@ class MayaSubmitMuster(pyblish.api.InstancePlugin):

|

|||

# such that proper initialisation happens the same

|

||||

# way as it does on a local machine.

|

||||

# TODO(marcus): This won't work if the slaves don't

|

||||

# have accesss to these paths, such as if slaves are

|

||||

# have access to these paths, such as if slaves are

|

||||

# running Linux and the submitter is on Windows.

|

||||

"PYTHONPATH",

|

||||

"PATH",

|

||||

|

|

|

|||

|

|

@ -78,7 +78,7 @@ class GetOverlappingUVs(object):

|

|||

if len(uarray) == 0 or len(varray) == 0:

|

||||

return (False, None, None)

|

||||

|

||||

# loop throught all vertices to construct edges/rays

|

||||

# loop through all vertices to construct edges/rays

|

||||

u = uarray[-1]

|

||||

v = varray[-1]

|

||||

for i in xrange(len(uarray)): # noqa: F821

|

||||

|

|
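The loop in the hunk above starts from the last vertex (`uarray[-1]`, `varray[-1]`) so that the first edge closes the polygon. A minimal sketch of that wrap-around pairing is given below, assuming each edge is simply the segment from the previous vertex to the current one. The function name `polygon_edges` and the tuple layout are illustrative assumptions, and `range` is used in place of the Python 2 `xrange` seen in the original.

```python
def polygon_edges(uarray, varray):
    """Pair consecutive UV vertices into closed-polygon edges.

    Hypothetical helper for illustration only. Each edge is returned as
    ((u_prev, v_prev), (u_curr, v_curr)).
    """
    if len(uarray) == 0 or len(varray) == 0:
        return []

    edges = []
    # Start from the last vertex so the first edge closes the loop.
    u, v = uarray[-1], varray[-1]
    for i in range(len(uarray)):
        next_u, next_v = uarray[i], varray[i]
        edges.append(((u, v), (next_u, next_v)))
        u, v = next_u, next_v
    return edges


if __name__ == "__main__":
    # Triangle with UVs (0, 0), (1, 0), (1, 1)
    for edge in polygon_edges([0, 1, 1], [0, 0, 1]):
        print(edge)
```

Seeding the loop with the last vertex avoids a special case for the closing edge.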

|

|||

|

|

@ -9,7 +9,7 @@ class ValidateRigContents(pyblish.api.InstancePlugin):

|

|||

|

||||

Every rig must contain at least two object sets:

|

||||

"controls_SET" - Set of all animatable controls

|

||||

"out_SET" - Set of all cachable meshes

|

||||

"out_SET" - Set of all cacheable meshes

|

||||

|

||||

"""

|

||||

|

||||

|

|

|

|||

|

|

@ -10,7 +10,7 @@ import re

|

|||

class ValidateUnrealStaticmeshName(pyblish.api.InstancePlugin):

|

||||

"""Validate name of Unreal Static Mesh

|

||||

|

||||

Unreals naming convention states that staticMesh sould start with `SM`

|

||||

Unreals naming convention states that staticMesh should start with `SM`

|

||||

prefix - SM_[Name]_## (Eg. SM_sube_01). This plugin also validates other

|

||||

types of meshes - collision meshes:

|

||||

|

||||

|

|

|

|||

|

|

@ -54,7 +54,7 @@ def install():

|

|||

''' Installing all requarements for Nuke host

|

||||

'''

|

||||

|

||||

# remove all registred callbacks form avalon.nuke

|

||||

# remove all registered callbacks form avalon.nuke

|

||||

from avalon import pipeline

|

||||

pipeline._registered_event_handlers.clear()

|

||||

|

||||

|

|

|

|||

|

|

@ -141,7 +141,7 @@ def check_inventory_versions():

|

|||

max_version = max(versions)

|

||||

|

||||

# check the available version and do match

|

||||

# change color of node if not max verion

|

||||

# change color of node if not max version

|

||||

if version.get("name") not in [max_version]:

|

||||

node["tile_color"].setValue(int("0xd84f20ff", 16))

|

||||

else:

|

||||

|

|

@ -236,10 +236,10 @@ def get_render_path(node):

|

|||

|

||||

|

||||

def format_anatomy(data):

|

||||

''' Helping function for formating of anatomy paths

|

||||

''' Helping function for formatting of anatomy paths

|

||||

|

||||

Arguments:

|

||||

data (dict): dictionary with attributes used for formating

|

||||

data (dict): dictionary with attributes used for formatting

|

||||

|

||||

Return:

|

||||

path (str)

|

||||

|

|

@ -462,7 +462,7 @@ def create_write_node(name, data, input=None, prenodes=None,

|

|||

else:

|

||||

now_node.setInput(0, prev_node)

|

||||

|

||||

# swith actual node to previous

|

||||

# switch actual node to previous

|

||||

prev_node = now_node

|

||||

|

||||

# creating write node

|

||||

|

|

@ -474,7 +474,7 @@ def create_write_node(name, data, input=None, prenodes=None,

|

|||

# connect to previous node

|

||||

now_node.setInput(0, prev_node)

|

||||

|

||||

# swith actual node to previous

|

||||

# switch actual node to previous

|

||||

prev_node = now_node

|

||||

|

||||

now_node = nuke.createNode("Output", "name Output1")

|

||||

|

|

@ -516,7 +516,7 @@ def create_write_node(name, data, input=None, prenodes=None,

|

|||

GN.addKnob(knob)

|

||||

else:

|

||||

if "___" in _k_name:

|

||||

# add devider

|

||||

# add divider

|

||||

GN.addKnob(nuke.Text_Knob(""))

|

||||

else:

|

||||

# add linked knob by _k_name

|

||||

|

|

@ -725,7 +725,7 @@ class WorkfileSettings(object):

|

|||

for i, n in enumerate(copy_inputs):

|

||||

nv.setInput(i, n)

|

||||

|

||||

# set coppied knobs

|

||||

# set copied knobs

|

||||

for k, v in copy_knobs.items():

|

||||

print(k, v)

|

||||

nv[k].setValue(v)

|

||||

|

|

@ -862,7 +862,7 @@ class WorkfileSettings(object):

|

|||

def set_reads_colorspace(self, read_clrs_inputs):

|

||||

""" Setting colorspace to Read nodes

|

||||

|

||||

Looping trought all read nodes and tries to set colorspace based

|

||||

Looping through all read nodes and tries to set colorspace based

|

||||

on regex rules in presets

|

||||

"""

|

||||

changes = {}

|

||||

|

|

@ -871,7 +871,7 @@ class WorkfileSettings(object):

|

|||

if n.Class() != "Read":

|

||||

continue

|

||||

|

||||

# check if any colorspace presets for read is mathing

|

||||

# check if any colorspace presets for read is matching

|

||||

preset_clrsp = None

|

||||

|

||||

for input in read_clrs_inputs:

|

||||

|

|

@ -1013,7 +1013,7 @@ class WorkfileSettings(object):

|

|||

|

||||

def reset_resolution(self):

|

||||

"""Set resolution to project resolution."""

|

||||

log.info("Reseting resolution")

|

||||

log.info("Resetting resolution")

|

||||

project = io.find_one({"type": "project"})

|

||||

asset = api.Session["AVALON_ASSET"]

|

||||

asset = io.find_one({"name": asset, "type": "asset"})

|

||||

|

|

|

|||

|

|

@ -209,7 +209,7 @@ class ExporterReview(object):

|

|||

nuke_imageio = opnlib.get_nuke_imageio_settings()

|

||||

|

||||

# TODO: this is only securing backward compatibility lets remove

|

||||

# this once all projects's anotomy are upated to newer config

|

||||

# this once all projects's anotomy are updated to newer config

|

||||

if "baking" in nuke_imageio.keys():

|

||||

return nuke_imageio["baking"]["viewerProcess"]

|

||||

else:

|

||||

|

|

@ -477,7 +477,7 @@ class ExporterReviewMov(ExporterReview):

|

|||

write_node["file_type"].setValue(str(self.ext))

|

||||

|

||||

# Knobs `meta_codec` and `mov64_codec` are not available on centos.

|

||||

# TODO should't this come from settings on outputs?

|

||||

# TODO shouldn't this come from settings on outputs?

|

||||

try:

|

||||

write_node["meta_codec"].setValue("ap4h")

|

||||

except Exception:

|

||||

|

|

|

|||

|

|

@ -5,9 +5,9 @@ from openpype.api import resources

|

|||

|

||||

|

||||

def set_context_favorites(favorites=None):

|

||||

""" Addig favorite folders to nuke's browser

|

||||

""" Adding favorite folders to nuke's browser

|

||||

|

||||

Argumets:

|

||||

Arguments:

|

||||

favorites (dict): couples of {name:path}

|

||||

"""

|

||||

favorites = favorites or {}

|

||||

|

|

@ -51,7 +51,7 @@ def gizmo_is_nuke_default(gizmo):

|

|||

def bake_gizmos_recursively(in_group=nuke.Root()):

|

||||

"""Converting a gizmo to group

|

||||

|

||||

Argumets:

|

||||

Arguments:

|

||||

is_group (nuke.Node)[optonal]: group node or all nodes

|

||||

"""

|

||||

# preserve selection after all is done

|

||||

|

|

|

|||

|

|

@ -48,7 +48,7 @@ class CreateGizmo(plugin.PypeCreator):

|

|||

gizmo_node["name"].setValue("{}_GZM".format(self.name))

|

||||

gizmo_node["tile_color"].setValue(int(self.node_color, 16))

|

||||

|

||||

# add sticky node wit guide

|

||||

# add sticky node with guide

|

||||

with gizmo_node:

|

||||

sticky = nuke.createNode("StickyNote")

|

||||

sticky["label"].setValue(

|

||||

|

|

@ -71,7 +71,7 @@ class CreateGizmo(plugin.PypeCreator):

|

|||

gizmo_node["name"].setValue("{}_GZM".format(self.name))

|

||||

gizmo_node["tile_color"].setValue(int(self.node_color, 16))

|

||||

|

||||

# add sticky node wit guide

|

||||

# add sticky node with guide

|

||||

with gizmo_node:

|

||||

sticky = nuke.createNode("StickyNote")

|

||||

sticky["label"].setValue(

|

||||

|

|

|

|||

|

|

@ -235,7 +235,7 @@ class LoadBackdropNodes(api.Loader):

|

|||

else:

|

||||

GN["tile_color"].setValue(int(self.node_color, 16))

|

||||

|

||||

self.log.info("udated to version: {}".format(version.get("name")))

|

||||

self.log.info("updated to version: {}".format(version.get("name")))

|

||||

|

||||

return update_container(GN, data_imprint)

|

||||

|

||||

|

|

|

|||

|

|

@ -156,7 +156,7 @@ class AlembicCameraLoader(api.Loader):

|

|||

# color node by correct color by actual version

|

||||

self.node_version_color(version, camera_node)

|

||||

|

||||

self.log.info("udated to version: {}".format(version.get("name")))

|

||||

self.log.info("updated to version: {}".format(version.get("name")))

|

||||

|

||||

return update_container(camera_node, data_imprint)

|

||||

|

||||

|

|

|

|||

|

|

@ -270,7 +270,7 @@ class LoadClip(plugin.NukeLoader):

|

|||

read_node,

|

||||

updated_dict

|

||||

)

|

||||

self.log.info("udated to version: {}".format(version.get("name")))

|

||||

self.log.info("updated to version: {}".format(version.get("name")))

|

||||

|

||||

if version_data.get("retime", None):

|

||||

self._make_retimes(read_node, version_data)

|

||||

|

|

@ -302,7 +302,7 @@ class LoadClip(plugin.NukeLoader):

|

|||

self._loader_shift(read_node, start_at_workfile)

|

||||

|

||||

def _make_retimes(self, parent_node, version_data):

|

||||

''' Create all retime and timewarping nodes with coppied animation '''

|

||||

''' Create all retime and timewarping nodes with copied animation '''

|

||||

speed = version_data.get('speed', 1)

|

||||

time_warp_nodes = version_data.get('timewarps', [])

|

||||

last_node = None

|

||||

|

|

|

|||

|

|

@ -253,7 +253,7 @@ class LoadEffects(api.Loader):

|

|||

else:

|

||||

GN["tile_color"].setValue(int("0x3469ffff", 16))

|

||||

|

||||

self.log.info("udated to version: {}".format(version.get("name")))

|

||||

self.log.info("updated to version: {}".format(version.get("name")))

|

||||

|

||||

def connect_read_node(self, group_node, asset, subset):

|

||||

"""

|

||||

|

|

@ -314,7 +314,7 @@ class LoadEffects(api.Loader):

|

|||

def byteify(self, input):

|

||||

"""

|

||||

Converts unicode strings to strings

|

||||

It goes trought all dictionary

|

||||

It goes through all dictionary

|

||||

|

||||

Arguments:

|

||||

input (dict/str): input

|

||||

|

|

|

|||

|

|

@ -258,7 +258,7 @@ class LoadEffectsInputProcess(api.Loader):

|

|||

else:

|

||||

GN["tile_color"].setValue(int("0x3469ffff", 16))

|

||||

|

||||

self.log.info("udated to version: {}".format(version.get("name")))

|

||||

self.log.info("updated to version: {}".format(version.get("name")))

|

||||

|

||||

def connect_active_viewer(self, group_node):

|

||||

"""

|

||||

|

|

@ -331,7 +331,7 @@ class LoadEffectsInputProcess(api.Loader):

|

|||

def byteify(self, input):

|

||||

"""

|

||||

Converts unicode strings to strings

|

||||

It goes trought all dictionary

|

||||

It goes through all dictionary

|

||||

|

||||

Arguments:

|

||||

input (dict/str): input

|

||||

|

|

|

|||

|

|

@ -149,7 +149,7 @@ class LoadGizmo(api.Loader):

|

|||

else:

|

||||

GN["tile_color"].setValue(int(self.node_color, 16))

|

||||

|

||||

self.log.info("udated to version: {}".format(version.get("name")))

|

||||

self.log.info("updated to version: {}".format(version.get("name")))

|

||||

|

||||

return update_container(GN, data_imprint)

|

||||

|

||||

|

|

|

|||

|

|

@ -155,7 +155,7 @@ class LoadGizmoInputProcess(api.Loader):

|

|||

else:

|

||||

GN["tile_color"].setValue(int(self.node_color, 16))

|

||||

|

||||

self.log.info("udated to version: {}".format(version.get("name")))

|

||||

self.log.info("updated to version: {}".format(version.get("name")))

|

||||

|

||||

return update_container(GN, data_imprint)

|

||||

|

||||

|

|

@ -210,7 +210,7 @@ class LoadGizmoInputProcess(api.Loader):

|

|||

def byteify(self, input):

|

||||

"""

|

||||

Converts unicode strings to strings

|

||||

It goes trought all dictionary

|

||||

It goes through all dictionary

|

||||

|

||||

Arguments:

|

||||

input (dict/str): input

|

||||

|

|

|

|||

|

|

@ -231,7 +231,7 @@ class LoadImage(api.Loader):

|

|||

node,

|

||||

updated_dict

|

||||

)

|

||||

self.log.info("udated to version: {}".format(version.get("name")))

|

||||

self.log.info("updated to version: {}".format(version.get("name")))

|

||||

|

||||

def remove(self, container):

|

||||

|

||||

|

|

|

|||

|

|

@ -156,7 +156,7 @@ class AlembicModelLoader(api.Loader):

|

|||

# color node by correct color by actual version

|

||||

self.node_version_color(version, model_node)

|

||||

|

||||

self.log.info("udated to version: {}".format(version.get("name")))

|

||||

self.log.info("updated to version: {}".format(version.get("name")))

|

||||

|

||||

return update_container(model_node, data_imprint)

|

||||

|

||||

|

|

|

|||

|

|

@ -67,7 +67,7 @@ class LinkAsGroup(api.Loader):

|

|||

P["useOutput"].setValue(True)

|

||||

|

||||

with P:

|

||||

# iterate trough all nodes in group node and find pype writes

|

||||

# iterate through all nodes in group node and find pype writes

|

||||

writes = [n.name() for n in nuke.allNodes()

|

||||

if n.Class() == "Group"

|

||||

if get_avalon_knob_data(n)]

|

||||

|

|

@ -152,7 +152,7 @@ class LinkAsGroup(api.Loader):

|

|||

else:

|

||||

node["tile_color"].setValue(int("0xff0ff0ff", 16))

|

||||

|

||||

self.log.info("udated to version: {}".format(version.get("name")))

|

||||

self.log.info("updated to version: {}".format(version.get("name")))

|

||||

|

||||

def remove(self, container):

|

||||

from avalon.nuke import viewer_update_and_undo_stop

|

||||

|

|

|

|||

|

|

@ -113,7 +113,7 @@ class ExtractCamera(openpype.api.Extractor):

|

|||

|

||||

|

||||

def bakeCameraWithAxeses(camera_node, output_range):

|

||||

""" Baking all perent hiearchy of axeses into camera

|

||||

""" Baking all perent hierarchy of axeses into camera

|

||||

with transposition onto word XYZ coordinance

|

||||

"""

|

||||

bakeFocal = False

|

||||

|

|

|

|||

|

|

@ -4,7 +4,7 @@ from avalon.nuke import maintained_selection

|

|||

|

||||

|

||||

class CreateOutputNode(pyblish.api.ContextPlugin):

|

||||

"""Adding output node for each ouput write node

|

||||

"""Adding output node for each output write node

|

||||

So when latly user will want to Load .nk as LifeGroup or Precomp

|

||||

Nuke will not complain about missing Output node

|

||||

"""

|

||||

|

|

|

|||

|

|

@ -49,7 +49,7 @@ class ExtractReviewDataMov(openpype.api.Extractor):

|

|||

|

||||

# test if family found in context

|

||||

test_families = any([

|

||||

# first if exact family set is mathing

|

||||

# first if exact family set is matching

|

||||

# make sure only interesetion of list is correct

|

||||

bool(set(families).intersection(f_families)),

|

||||

# and if famiies are set at all

|

||||

|

|

|

|||

|

|

@ -15,7 +15,7 @@ class IncrementScriptVersion(pyblish.api.ContextPlugin):

|

|||

def process(self, context):

|

||||

|

||||

assert all(result["success"] for result in context.data["results"]), (

|

||||

"Publishing not succesfull so version is not increased.")

|

||||

"Publishing not successful so version is not increased.")

|

||||

|

||||

from openpype.lib import version_up

|

||||

path = context.data["currentFile"]

|

||||

|

|

|

|||

|

|

@ -3,7 +3,7 @@ import pyblish.api

|

|||

|

||||

|

||||

class RemoveOutputNode(pyblish.api.ContextPlugin):

|

||||

"""Removing output node for each ouput write node

|

||||

"""Removing output node for each output write node

|

||||

|

||||

"""

|

||||

label = 'Output Node Remove'

|

||||

|

|

|

|||

|

|

@ -48,7 +48,7 @@ class SelectCenterInNodeGraph(pyblish.api.Action):

|

|||

@pyblish.api.log

|

||||

class ValidateBackdrop(pyblish.api.InstancePlugin):

|

||||