Mirror of https://github.com/ynput/ayon-core.git (synced 2025-12-24 12:54:40 +01:00)

Merge branch 'develop' into release/3.15.x

Commit 258acdfca1

119 changed files with 8827 additions and 2033 deletions

.github/workflows/prerelease.yml (42 lines changed)

@@ -37,27 +37,27 @@ jobs:
echo ::set-output name=next_tag::$RESULT

- name: "✏️ Generate full changelog"
if: steps.version_type.outputs.type != 'skip'
id: generate-full-changelog
uses: heinrichreimer/github-changelog-generator-action@v2.2
with:
token: ${{ secrets.ADMIN_TOKEN }}
addSections: '{"documentation":{"prefix":"### 📖 Documentation","labels":["type: documentation"]},"tests":{"prefix":"### ✅ Testing","labels":["tests"]},"feature":{"prefix":"**🆕 New features**", "labels":["type: feature"]},"breaking":{"prefix":"**💥 Breaking**", "labels":["breaking"]},"enhancements":{"prefix":"**🚀 Enhancements**", "labels":["type: enhancement"]},"bugs":{"prefix":"**🐛 Bug fixes**", "labels":["type: bug"]},"deprecated":{"prefix":"**⚠️ Deprecations**", "labels":["depreciated"]}, "refactor":{"prefix":"**🔀 Refactored code**", "labels":["refactor"]}}'
issues: false
issuesWoLabels: false
sinceTag: "3.0.0"
maxIssues: 100
pullRequests: true
prWoLabels: false
author: false
unreleased: true
compareLink: true
stripGeneratorNotice: true
verbose: true
unreleasedLabel: ${{ steps.version.outputs.next_tag }}
excludeTagsRegex: "CI/.+"
releaseBranch: "main"
# - name: "✏️ Generate full changelog"
# if: steps.version_type.outputs.type != 'skip'
# id: generate-full-changelog
# uses: heinrichreimer/github-changelog-generator-action@v2.3
# with:
# token: ${{ secrets.ADMIN_TOKEN }}
# addSections: '{"documentation":{"prefix":"### 📖 Documentation","labels":["type: documentation"]},"tests":{"prefix":"### ✅ Testing","labels":["tests"]},"feature":{"prefix":"**🆕 New features**", "labels":["type: feature"]},"breaking":{"prefix":"**💥 Breaking**", "labels":["breaking"]},"enhancements":{"prefix":"**🚀 Enhancements**", "labels":["type: enhancement"]},"bugs":{"prefix":"**🐛 Bug fixes**", "labels":["type: bug"]},"deprecated":{"prefix":"**⚠️ Deprecations**", "labels":["depreciated"]}, "refactor":{"prefix":"**🔀 Refactored code**", "labels":["refactor"]}}'
# issues: false
# issuesWoLabels: false
# sinceTag: "3.12.0"
# maxIssues: 100
# pullRequests: true
# prWoLabels: false
# author: false
# unreleased: true
# compareLink: true
# stripGeneratorNotice: true
# verbose: true
# unreleasedLabel: ${{ steps.version.outputs.next_tag }}
# excludeTagsRegex: "CI/.+"
# releaseBranch: "main"

- name: "🖨️ Print changelog to console"
if: steps.version_type.outputs.type != 'skip'
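The `addSections` input decides which changelog heading a pull request is filed under, based on its labels. The sketch below only parses that JSON (copied verbatim from the workflow) into a label-to-heading table; it does not reproduce the changelog generator itself, and `label_to_heading` is a hypothetical helper name. One thing the table makes visible: the deprecations section matches the label `depreciated` exactly as spelled in the config, so presumably a PR labelled `deprecated` would not be filed there.

```python
import json

# addSections value copied verbatim from the workflow step above.
ADD_SECTIONS = '{"documentation":{"prefix":"### 📖 Documentation","labels":["type: documentation"]},"tests":{"prefix":"### ✅ Testing","labels":["tests"]},"feature":{"prefix":"**🆕 New features**", "labels":["type: feature"]},"breaking":{"prefix":"**💥 Breaking**", "labels":["breaking"]},"enhancements":{"prefix":"**🚀 Enhancements**", "labels":["type: enhancement"]},"bugs":{"prefix":"**🐛 Bug fixes**", "labels":["type: bug"]},"deprecated":{"prefix":"**⚠️ Deprecations**", "labels":["depreciated"]}, "refactor":{"prefix":"**🔀 Refactored code**", "labels":["refactor"]}}'


def label_to_heading(add_sections_json):
    """Return a {label: heading} dict built from an addSections JSON string."""
    sections = json.loads(add_sections_json)
    mapping = {}
    for section in sections.values():
        # Each section lists the PR labels that route into its heading.
        for label in section["labels"]:
            mapping[label] = section["prefix"]
    return mapping


for label, heading in sorted(label_to_heading(ADD_SECTIONS).items()):
    print("{:<20} -> {}".format(label, heading))
```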

.github/workflows/release.yml (42 lines changed)

@@ -33,27 +33,27 @@ jobs:
echo ::set-output name=last_release::$LASTRELEASE
echo ::set-output name=release_tag::$RESULT

- name: "✏️ Generate full changelog"
if: steps.version.outputs.release_tag != 'skip'
id: generate-full-changelog
uses: heinrichreimer/github-changelog-generator-action@v2.2
with:
token: ${{ secrets.ADMIN_TOKEN }}
addSections: '{"documentation":{"prefix":"### 📖 Documentation","labels":["type: documentation"]},"tests":{"prefix":"### ✅ Testing","labels":["tests"]},"feature":{"prefix":"**🆕 New features**", "labels":["type: feature"]},"breaking":{"prefix":"**💥 Breaking**", "labels":["breaking"]},"enhancements":{"prefix":"**🚀 Enhancements**", "labels":["type: enhancement"]},"bugs":{"prefix":"**🐛 Bug fixes**", "labels":["type: bug"]},"deprecated":{"prefix":"**⚠️ Deprecations**", "labels":["depreciated"]}, "refactor":{"prefix":"**🔀 Refactored code**", "labels":["refactor"]}}'
issues: false
issuesWoLabels: false
sinceTag: "3.0.0"
maxIssues: 100
pullRequests: true
prWoLabels: false
author: false
unreleased: true
compareLink: true
stripGeneratorNotice: true
verbose: true
futureRelease: ${{ steps.version.outputs.release_tag }}
excludeTagsRegex: "CI/.+"
releaseBranch: "main"
# - name: "✏️ Generate full changelog"
# if: steps.version.outputs.release_tag != 'skip'
# id: generate-full-changelog
# uses: heinrichreimer/github-changelog-generator-action@v2.3
# with:
# token: ${{ secrets.ADMIN_TOKEN }}
# addSections: '{"documentation":{"prefix":"### 📖 Documentation","labels":["type: documentation"]},"tests":{"prefix":"### ✅ Testing","labels":["tests"]},"feature":{"prefix":"**🆕 New features**", "labels":["type: feature"]},"breaking":{"prefix":"**💥 Breaking**", "labels":["breaking"]},"enhancements":{"prefix":"**🚀 Enhancements**", "labels":["type: enhancement"]},"bugs":{"prefix":"**🐛 Bug fixes**", "labels":["type: bug"]},"deprecated":{"prefix":"**⚠️ Deprecations**", "labels":["depreciated"]}, "refactor":{"prefix":"**🔀 Refactored code**", "labels":["refactor"]}}'
# issues: false
# issuesWoLabels: false
# sinceTag: "3.12.0"
# maxIssues: 100
# pullRequests: true
# prWoLabels: false
# author: false
# unreleased: true
# compareLink: true
# stripGeneratorNotice: true
# verbose: true
# futureRelease: ${{ steps.version.outputs.release_tag }}
# excludeTagsRegex: "CI/.+"
# releaseBranch: "main"

- name: 💾 Commit and Tag
id: git_commit

.gitignore (2 lines changed)

@@ -110,3 +110,5 @@ tools/run_eventserver.*
# Developer tools
tools/dev_*

.github_changelog_generator

CHANGELOG.md (1759 lines changed)

File diff suppressed because it is too large

HISTORY.md (1814 lines changed)

File diff suppressed because it is too large

@@ -11,7 +11,6 @@ from .lib import (
PypeLogger,
Logger,
Anatomy,
config,
execute,
run_subprocess,
version_up,

@@ -72,7 +71,6 @@ __all__ = [
"PypeLogger",
"Logger",
"Anatomy",
"config",
"execute",
"get_default_components",
"ApplicationManager",

@@ -277,6 +277,13 @@ def projectmanager():
PypeCommands().launch_project_manager()


@main.command(context_settings={"ignore_unknown_options": True})
def publish_report_viewer():
from openpype.tools.publisher.publish_report_viewer import main

sys.exit(main())


@main.command()
@click.argument("output_path")
@click.option("--project", help="Define project context")

@@ -3,7 +3,7 @@ from typing import List
import bpy

import pyblish.api
import openpype.api

import openpype.hosts.blender.api.action
from openpype.pipeline.publish import ValidateContentsOrder

@@ -3,14 +3,15 @@ from typing import List
import bpy

import pyblish.api
import openpype.api

from openpype.pipeline.publish import ValidateContentsOrder
import openpype.hosts.blender.api.action


class ValidateMeshHasUvs(pyblish.api.InstancePlugin):
"""Validate that the current mesh has UV's."""

order = openpype.api.ValidateContentsOrder
order = ValidateContentsOrder
hosts = ["blender"]
families = ["model"]
category = "geometry"

@@ -3,14 +3,15 @@ from typing import List
import bpy

import pyblish.api
import openpype.api

from openpype.pipeline.publish import ValidateContentsOrder
import openpype.hosts.blender.api.action


class ValidateMeshNoNegativeScale(pyblish.api.Validator):
"""Ensure that meshes don't have a negative scale."""

order = openpype.api.ValidateContentsOrder
order = ValidateContentsOrder
hosts = ["blender"]
families = ["model"]
category = "geometry"

@@ -3,7 +3,7 @@ from typing import List
import bpy

import pyblish.api
import openpype.api

import openpype.hosts.blender.api.action
from openpype.pipeline.publish import ValidateContentsOrder

@@ -4,7 +4,7 @@ import mathutils
import bpy

import pyblish.api
import openpype.api

import openpype.hosts.blender.api.action
from openpype.pipeline.publish import ValidateContentsOrder

@@ -1,7 +1,7 @@
"""Host API required Work Files tool"""

import os
from openpype.api import Logger
from openpype.lib import Logger
# from .. import (
# get_project_manager,
# get_current_project

@@ -3,16 +3,17 @@ import json
import tempfile
import contextlib
import socket
from pprint import pformat

from openpype.lib import (
PreLaunchHook,
get_openpype_username
get_openpype_username,
run_subprocess,
)
from openpype.lib.applications import (
ApplicationLaunchFailed
)
from openpype.hosts import flame as opflame
import openpype
from pprint import pformat


class FlamePrelaunch(PreLaunchHook):

@@ -127,7 +128,6 @@ class FlamePrelaunch(PreLaunchHook):
except OSError as exc:
self.log.warning("Not able to open files: {}".format(exc))


def _get_flame_fps(self, fps_num):
fps_table = {
float(23.976): "23.976 fps",

@@ -179,7 +179,7 @@ class FlamePrelaunch(PreLaunchHook):
"env": self.launch_context.env
}

openpype.api.run_subprocess(args, **process_kwargs)
run_subprocess(args, **process_kwargs)

# process returned json file to pass launch args
return_json_data = open(tmp_json_path).read()

@@ -44,11 +44,26 @@ INVENTORY_PATH = os.path.join(PLUGINS_DIR, "inventory")
class FusionLogHandler(logging.Handler):
# Keep a reference to fusion's Print function (Remote Object)
_print = None

@property
def print(self):
if self._print is not None:
# Use cached
return self._print

_print = getattr(sys.modules["__main__"], "fusion").Print
if _print is None:
# Backwards compatibility: Print method on Fusion instance was
# added around Fusion 17.4 and wasn't available on PyRemote Object
# before
_print = get_current_comp().Print
self._print = _print
return _print

def emit(self, record):
entry = self.format(record)
self._print(entry)
self.print(entry)


def install():
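In `emit`, the line `self._print(entry)` calls the cached class attribute directly, which is still `None` until something has resolved it; `self.print(entry)` goes through the property, which resolves the callable on first use, falls back when the first lookup yields `None`, and caches the result. A minimal sketch of that lazy-resolve-and-cache pattern, runnable outside Fusion: the two Fusion lookups are replaced by hypothetical `primary` and `fallback` resolver callables, and `LazyPrintHandler` is an illustrative name, not part of OpenPype.

```python
import logging


class LazyPrintHandler(logging.Handler):
    """Forward log records to a print-like callable resolved on first use."""

    def __init__(self, primary, fallback):
        super().__init__()
        self._primary = primary    # hypothetical: returns the preferred callable or None
        self._fallback = fallback  # hypothetical: returns an alternative callable
        self._print = None         # cache, filled on the first emit()

    @property
    def print(self):
        if self._print is not None:
            return self._print  # use the cached callable
        func = self._primary()
        if func is None:
            # Preferred lookup is unavailable, use the fallback instead
            func = self._fallback()
        self._print = func
        return func

    def emit(self, record):
        # Go through the property so the callable is resolved before use
        self.print(self.format(record))
```

Usage: attach it to a logger; both resolvers run only once, on the first record.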

@@ -1,8 +1,9 @@
# -*- coding: utf-8 -*-
import openpype.api
import pyblish.api
import hou

from openpype.pipeline.publish import RepairAction


class ValidateWorkfilePaths(pyblish.api.InstancePlugin):
"""Validate workfile paths so they are absolute."""

@@ -11,7 +12,7 @@ class ValidateWorkfilePaths(pyblish.api.InstancePlugin):
families = ["workfile"]
hosts = ["houdini"]
label = "Validate Workfile Paths"
actions = [openpype.api.RepairAction]
actions = [RepairAction]
optional = True

node_types = ["file", "alembic"]

@@ -2459,182 +2459,120 @@ def bake_to_world_space(nodes,
def load_capture_preset(data=None):
"""Convert OpenPype Extract Playblast settings to `capture` arguments

Input data is the settings from:
`project_settings/maya/publish/ExtractPlayblast/capture_preset`

Args:
data (dict): Capture preset settings from OpenPype settings

Returns:
dict: `capture.capture` compatible keyword arguments

"""

import capture

preset = data

options = dict()
viewport_options = dict()
viewport2_options = dict()
camera_options = dict()

# CODEC
id = 'Codec'
for key in preset[id]:
options[str(key)] = preset[id][key]
# Straight key-value match from settings to capture arguments
options.update(data["Codec"])
options.update(data["Generic"])
options.update(data["Resolution"])

# GENERIC
id = 'Generic'
for key in preset[id]:
options[str(key)] = preset[id][key]

# RESOLUTION
id = 'Resolution'
options['height'] = preset[id]['height']
options['width'] = preset[id]['width']
camera_options.update(data['Camera Options'])
viewport_options.update(data["Renderer"])

# DISPLAY OPTIONS
id = 'Display Options'
disp_options = {}
for key in preset[id]:
for key, value in data['Display Options'].items():
if key.startswith('background'):
disp_options[key] = preset['Display Options'][key]
if len(disp_options[key]) == 4:
disp_options[key][0] = (float(disp_options[key][0])/255)
disp_options[key][1] = (float(disp_options[key][1])/255)
disp_options[key][2] = (float(disp_options[key][2])/255)
disp_options[key].pop()
# Convert background, backgroundTop, backgroundBottom colors
if len(value) == 4:
# Ignore alpha + convert RGB to float
value = [
float(value[0]) / 255,
float(value[1]) / 255,
float(value[2]) / 255
]
disp_options[key] = value
else:
disp_options['displayGradient'] = True

options['display_options'] = disp_options

# VIEWPORT OPTIONS
temp_options = {}
id = 'Renderer'
for key in preset[id]:
temp_options[str(key)] = preset[id][key]
# Viewport Options has a mixture of Viewport2 Options and Viewport Options
# to pass along to capture. So we'll need to differentiate between the two
VIEWPORT2_OPTIONS = {
"textureMaxResolution",
"renderDepthOfField",
"ssaoEnable",
"ssaoSamples",
"ssaoAmount",
"ssaoRadius",
"ssaoFilterRadius",
"hwFogStart",
"hwFogEnd",
"hwFogAlpha",
"hwFogFalloff",
"hwFogColorR",
"hwFogColorG",
"hwFogColorB",
"hwFogDensity",
"motionBlurEnable",
"motionBlurSampleCount",
"motionBlurShutterOpenFraction",
"lineAAEnable"
}
for key, value in data['Viewport Options'].items():

temp_options2 = {}
id = 'Viewport Options'
for key in preset[id]:
# There are some keys we want to ignore
if key in {"override_viewport_options", "high_quality"}:
continue

# First handle special cases where we do value conversion to
# separate option values
if key == 'textureMaxResolution':
if preset[id][key] > 0:
temp_options2['textureMaxResolution'] = preset[id][key]
temp_options2['enableTextureMaxRes'] = True
temp_options2['textureMaxResMode'] = 1
viewport2_options['textureMaxResolution'] = value
if value > 0:
viewport2_options['enableTextureMaxRes'] = True
viewport2_options['textureMaxResMode'] = 1
else:
temp_options2['textureMaxResolution'] = preset[id][key]
temp_options2['enableTextureMaxRes'] = False
temp_options2['textureMaxResMode'] = 0
viewport2_options['enableTextureMaxRes'] = False
viewport2_options['textureMaxResMode'] = 0

if key == 'multiSample':
if preset[id][key] > 0:
temp_options2['multiSampleEnable'] = True
temp_options2['multiSampleCount'] = preset[id][key]
elif key == 'multiSample':
viewport2_options['multiSampleEnable'] = value > 0
viewport2_options['multiSampleCount'] = value

elif key == 'alphaCut':
viewport2_options['transparencyAlgorithm'] = 5
viewport2_options['transparencyQuality'] = 1

elif key == 'hwFogFalloff':
# Settings enum value string to integer
viewport2_options['hwFogFalloff'] = int(value)

# Then handle Viewport 2.0 Options
elif key in VIEWPORT2_OPTIONS:
viewport2_options[key] = value

# Then assume remainder is Viewport Options
else:
temp_options2['multiSampleEnable'] = False
temp_options2['multiSampleCount'] = preset[id][key]
viewport_options[key] = value

if key == 'renderDepthOfField':
temp_options2['renderDepthOfField'] = preset[id][key]

if key == 'ssaoEnable':
if preset[id][key] is True:
temp_options2['ssaoEnable'] = True
else:
temp_options2['ssaoEnable'] = False

if key == 'ssaoSamples':
temp_options2['ssaoSamples'] = preset[id][key]

if key == 'ssaoAmount':
temp_options2['ssaoAmount'] = preset[id][key]

if key == 'ssaoRadius':
temp_options2['ssaoRadius'] = preset[id][key]

if key == 'hwFogDensity':
temp_options2['hwFogDensity'] = preset[id][key]

if key == 'ssaoFilterRadius':
temp_options2['ssaoFilterRadius'] = preset[id][key]

if key == 'alphaCut':
temp_options2['transparencyAlgorithm'] = 5
temp_options2['transparencyQuality'] = 1

if key == 'headsUpDisplay':
temp_options['headsUpDisplay'] = True

if key == 'fogging':
temp_options['fogging'] = preset[id][key] or False

if key == 'hwFogStart':
temp_options2['hwFogStart'] = preset[id][key]

if key == 'hwFogEnd':
temp_options2['hwFogEnd'] = preset[id][key]

if key == 'hwFogAlpha':
temp_options2['hwFogAlpha'] = preset[id][key]

if key == 'hwFogFalloff':
temp_options2['hwFogFalloff'] = int(preset[id][key])

if key == 'hwFogColorR':
temp_options2['hwFogColorR'] = preset[id][key]

if key == 'hwFogColorG':
temp_options2['hwFogColorG'] = preset[id][key]

if key == 'hwFogColorB':
temp_options2['hwFogColorB'] = preset[id][key]

if key == 'motionBlurEnable':
if preset[id][key] is True:
temp_options2['motionBlurEnable'] = True
else:
temp_options2['motionBlurEnable'] = False

if key == 'motionBlurSampleCount':
temp_options2['motionBlurSampleCount'] = preset[id][key]

if key == 'motionBlurShutterOpenFraction':
temp_options2['motionBlurShutterOpenFraction'] = preset[id][key]

if key == 'lineAAEnable':
if preset[id][key] is True:
temp_options2['lineAAEnable'] = True
else:
temp_options2['lineAAEnable'] = False

else:
temp_options[str(key)] = preset[id][key]

for key in ['override_viewport_options',
'high_quality',
'alphaCut',
'gpuCacheDisplayFilter',
'multiSample',
'ssaoEnable',
'ssaoSamples',
'ssaoAmount',
'ssaoFilterRadius',
'ssaoRadius',
'hwFogStart',
'hwFogEnd',
'hwFogAlpha',
'hwFogFalloff',
'hwFogColorR',
'hwFogColorG',
'hwFogColorB',
'hwFogDensity',
'textureMaxResolution',
'motionBlurEnable',
'motionBlurSampleCount',
'motionBlurShutterOpenFraction',
'lineAAEnable',
'renderDepthOfField'
]:
temp_options.pop(key, None)

options['viewport_options'] = temp_options
options['viewport2_options'] = temp_options2
options['viewport_options'] = viewport_options
options['viewport2_options'] = viewport2_options
options['camera_options'] = camera_options

# use active sound track
scene = capture.parse_active_scene()
options['sound'] = scene['sound']

# options['display_options'] = temp_options

return options
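The rewritten `load_capture_preset` routes each "Viewport Options" entry into either `viewport_options` or `viewport2_options`, and converts 0-255 RGBA background colors into 0-1 RGB floats. The sketch below reproduces that routing for a reduced set of keys as plain functions with no Maya or `capture` dependency. It is a simplification under stated assumptions: only the `textureMaxResolution` and `multiSample` special cases are kept (`alphaCut` and `hwFogFalloff` are omitted), `VIEWPORT2_KEYS` is a hypothetical subset of `VIEWPORT2_OPTIONS`, only the color conversion of the display options is covered, and both function names are illustrative.

```python
# Hypothetical subset of the VIEWPORT2_OPTIONS set from the diff.
VIEWPORT2_KEYS = {"ssaoEnable", "ssaoSamples", "hwFogDensity", "lineAAEnable"}
# Keys that are settings switches rather than viewport options.
IGNORED_KEYS = {"override_viewport_options", "high_quality"}


def convert_background_colors(display_options):
    """Turn 0-255 RGBA background colors into 0-1 RGB floats."""
    converted = {}
    for key, value in display_options.items():
        if key.startswith("background") and len(value) == 4:
            # Ignore alpha and normalize each RGB channel to 0-1
            value = [float(channel) / 255 for channel in value[:3]]
        converted[key] = value
    return converted


def split_viewport_options(viewport_settings, viewport2_keys=VIEWPORT2_KEYS):
    """Route settings keys into (viewport_options, viewport2_options)."""
    viewport_options = {}
    viewport2_options = {}
    for key, value in viewport_settings.items():
        if key in IGNORED_KEYS:
            continue
        if key == "textureMaxResolution":
            # A positive value enables the texture size clamp
            viewport2_options["textureMaxResolution"] = value
            viewport2_options["enableTextureMaxRes"] = value > 0
            viewport2_options["textureMaxResMode"] = 1 if value > 0 else 0
        elif key == "multiSample":
            # The sample count doubles as the on/off switch
            viewport2_options["multiSampleEnable"] = value > 0
            viewport2_options["multiSampleCount"] = value
        elif key in viewport2_keys:
            viewport2_options[key] = value
        else:
            # Anything else is assumed to be a classic viewport option
            viewport_options[key] = value
    return viewport_options, viewport2_options
```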

@@ -80,7 +80,7 @@ IMAGE_PREFIXES = {
"mayahardware2": "defaultRenderGlobals.imageFilePrefix"
}

RENDERMAN_IMAGE_DIR = "maya/<scene>/<layer>"
RENDERMAN_IMAGE_DIR = "<scene>/<layer>"


def has_tokens(string, tokens):

@@ -260,20 +260,20 @@ class ARenderProducts:
"""
try:
file_prefix_attr = IMAGE_PREFIXES[self.renderer]
prefix_attr = IMAGE_PREFIXES[self.renderer]
except KeyError:
raise UnsupportedRendererException(
"Unsupported renderer {}".format(self.renderer)
)

file_prefix = self._get_attr(file_prefix_attr)
prefix = self._get_attr(prefix_attr)

if not file_prefix:
if not prefix:
# Fall back to scene name by default
log.debug("Image prefix not set, using <Scene>")
file_prefix = "<Scene>"

return file_prefix
return prefix

def get_render_attribute(self, attribute):
"""Get attribute from render options.

@@ -730,13 +730,16 @@ class RenderProductsVray(ARenderProducts):
"""Get image prefix for V-Ray.

This overrides :func:`ARenderProducts.get_renderer_prefix()` as
we must add `<aov>` token manually.
we must add `<aov>` token manually. This is done only for
non-multipart outputs, where `<aov>` token doesn't make sense.

See also:
:func:`ARenderProducts.get_renderer_prefix()`

"""
prefix = super(RenderProductsVray, self).get_renderer_prefix()
if self.multipart:
return prefix
aov_separator = self._get_aov_separator()
prefix = "{}{}<aov>".format(prefix, aov_separator)
return prefix

@@ -974,15 +977,18 @@ class RenderProductsRedshift(ARenderProducts):
"""Get image prefix for Redshift.

This overrides :func:`ARenderProducts.get_renderer_prefix()` as
we must add `<aov>` token manually.
we must add `<aov>` token manually. This is done only for
non-multipart outputs, where `<aov>` token doesn't make sense.

See also:
:func:`ARenderProducts.get_renderer_prefix()`

"""
file_prefix = super(RenderProductsRedshift, self).get_renderer_prefix()
separator = self.extract_separator(file_prefix)
prefix = "{}{}<aov>".format(file_prefix, separator or "_")
prefix = super(RenderProductsRedshift, self).get_renderer_prefix()
if self.multipart:
return prefix
separator = self.extract_separator(prefix)
prefix = "{}{}<aov>".format(prefix, separator or "_")
return prefix

def get_render_products(self):
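Taken together, the base `get_renderer_prefix` and the V-Ray/Redshift overrides produce a prefix as follows: fall back to `<Scene>` when no prefix is set, return it unchanged for multipart output, otherwise append a separator and the `<aov>` token. The sketch below condenses that into one hypothetical function; the separator argument stands in for `_get_aov_separator()` (V-Ray) or `extract_separator()` (Redshift), and the `"_"` default follows the Redshift override. Note that in the `ARenderProducts` hunk, `file_prefix = "<Scene>"` appears as unchanged context while the code now reads and returns `prefix`, so judging from the hunk's line counts the `<Scene>` fallback seems to land in a variable that is no longer returned; the sketch assigns the fallback to the name it returns.

```python
def renderer_prefix(configured_prefix, multipart, separator=None):
    """Hypothetical condensed version of the prefix logic shown in the diff.

    configured_prefix: value of the renderer's image prefix attribute (may be empty)
    multipart: True when every AOV is written into a single multipart file
    separator: AOV separator; "_" is used when none is found (as in Redshift)
    """
    # Fall back to the scene name token when no prefix is configured.
    prefix = configured_prefix or "<Scene>"
    if multipart:
        # A multipart file holds all AOVs, so no per-AOV token is added.
        return prefix
    return "{}{}<aov>".format(prefix, separator or "_")
```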

@@ -29,7 +29,7 @@ class RenderSettings(object):
_image_prefixes = {
'vray': get_current_project_settings()["maya"]["RenderSettings"]["vray_renderer"]["image_prefix"], # noqa
'arnold': get_current_project_settings()["maya"]["RenderSettings"]["arnold_renderer"]["image_prefix"], # noqa
'renderman': 'maya/<Scene>/<layer>/<layer>{aov_separator}<aov>',
'renderman': '<Scene>/<layer>/<layer>{aov_separator}<aov>',
'redshift': get_current_project_settings()["maya"]["RenderSettings"]["redshift_renderer"]["image_prefix"] # noqa
}

@@ -12,6 +12,7 @@ class CreateAnimation(plugin.Creator):
family = "animation"
icon = "male"
write_color_sets = False
write_face_sets = False

def __init__(self, *args, **kwargs):
super(CreateAnimation, self).__init__(*args, **kwargs)

@@ -24,7 +25,7 @@ class CreateAnimation(plugin.Creator):
# Write vertex colors with the geometry.
self.data["writeColorSets"] = self.write_color_sets
self.data["writeFaceSets"] = False
self.data["writeFaceSets"] = self.write_face_sets

# Include only renderable visible shapes.
# Skips locators and empty transforms

@@ -9,13 +9,14 @@ class CreateModel(plugin.Creator):
family = "model"
icon = "cube"
defaults = ["Main", "Proxy", "_MD", "_HD", "_LD"]

write_color_sets = False
write_face_sets = False
def __init__(self, *args, **kwargs):
super(CreateModel, self).__init__(*args, **kwargs)

# Vertex colors with the geometry
self.data["writeColorSets"] = False
self.data["writeFaceSets"] = False
self.data["writeColorSets"] = self.write_color_sets
self.data["writeFaceSets"] = self.write_face_sets

# Include attributes by attribute name or prefix
self.data["attr"] = ""

@@ -12,6 +12,7 @@ class CreatePointCache(plugin.Creator):
family = "pointcache"
icon = "gears"
write_color_sets = False
write_face_sets = False

def __init__(self, *args, **kwargs):
super(CreatePointCache, self).__init__(*args, **kwargs)

@@ -21,7 +22,8 @@ class CreatePointCache(plugin.Creator):
# Vertex colors with the geometry.
self.data["writeColorSets"] = self.write_color_sets
self.data["writeFaceSets"] = False # Vertex colors with the geometry.
# Vertex colors with the geometry.
self.data["writeFaceSets"] = self.write_face_sets
self.data["renderableOnly"] = False # Only renderable visible shapes
self.data["visibleOnly"] = False # only nodes that are visible
self.data["includeParentHierarchy"] = False # Include parent groups
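In the three creators above, the hardcoded `False` for `writeFaceSets` (and, in `CreateModel`, `writeColorSets`) is replaced by the class attributes `write_color_sets` and `write_face_sets`. The effect is that a value assigned to the class before instantiation, for example from project settings, flows into `self.data`. How OpenPype actually applies settings to creator classes is not shown in this diff, so both `CreatorSketch` and `apply_settings` below are hypothetical stand-ins that only illustrate the class-attribute-default pattern.

```python
class CreatorSketch(object):
    """Hypothetical stand-in for a creator plugin (not the OpenPype API)."""

    # Defaults, meant to be overridden on the class before instantiation
    write_color_sets = False
    write_face_sets = False

    def __init__(self):
        self.data = {}
        # Instance data reads the (possibly overridden) class attributes
        self.data["writeColorSets"] = self.write_color_sets
        self.data["writeFaceSets"] = self.write_face_sets


def apply_settings(plugin_cls, settings):
    """Hypothetical helper: copy known keys from a settings dict onto the class."""
    for key, value in settings.items():
        # Only override attributes the class already declares
        if hasattr(plugin_cls, key):
            setattr(plugin_cls, key, value)
```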

@@ -13,22 +13,14 @@ from openpype.settings import (
get_system_settings,
get_project_settings,
)
from openpype.lib import requests_get
from openpype.modules import ModulesManager
from openpype.pipeline import legacy_io
from openpype.hosts.maya.api import (
lib,
lib_rendersettings,
plugin
)
from openpype.lib import requests_get
from openpype.api import (
get_system_settings,
get_project_settings)
from openpype.modules import ModulesManager
from openpype.pipeline import legacy_io
from openpype.pipeline import (
CreatorError,
legacy_io,
)
from openpype.pipeline.context_tools import get_current_project_asset


class CreateRender(plugin.Creator):

@@ -34,14 +34,15 @@ class ExtractLayout(publish.Extractor):
for asset in cmds.sets(str(instance), query=True):
# Find the container
grp_name = asset.split(':')[0]
containers = cmds.ls(f"{grp_name}*_CON")
containers = cmds.ls("{}*_CON".format(grp_name))

assert len(containers) == 1, \
f"More than one container found for {asset}"
"More than one container found for {}".format(asset)

container = containers[0]

representation_id = cmds.getAttr(f"{container}.representation")
representation_id = cmds.getAttr(
"{}.representation".format(container))

representation = get_representation_by_id(
project_name,

@@ -56,7 +57,8 @@ class ExtractLayout(publish.Extractor):
json_element = {
"family": family,
"instance_name": cmds.getAttr(f"{container}.name"),
"instance_name": cmds.getAttr(
"{}.namespace".format(container)),
"representation": str(representation_id),
"version": str(version_id)
}

@@ -77,8 +77,10 @@ class ExtractPlayblast(publish.Extractor):
preset['height'] = asset_height
preset['start_frame'] = start
preset['end_frame'] = end
camera_option = preset.get("camera_option", {})
camera_option["depthOfField"] = cmds.getAttr(

# Enforce persisting camera depth of field
camera_options = preset.setdefault("camera_options", {})
camera_options["depthOfField"] = cmds.getAttr(
"{0}.depthOfField".format(camera))

stagingdir = self.staging_dir(instance)
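The playblast change swaps `dict.get` for `dict.setdefault`. When the key is missing, `get` returns a fresh empty dict that is never stored in `preset`, so the `depthOfField` value written into it is silently discarded; `setdefault` inserts the empty dict first and returns that same object, so the write persists. The key is also renamed to `camera_options`, the key `load_capture_preset` writes, which suggests the old singular key was usually absent. A minimal standalone comparison (function names are illustrative):

```python
def set_dof_with_get(preset, value):
    # dict.get returns a new {} when the key is missing; it is never
    # stored back in preset, so this assignment is lost.
    camera_option = preset.get("camera_option", {})
    camera_option["depthOfField"] = value


def set_dof_with_setdefault(preset, value):
    # dict.setdefault stores {} under the key when it is missing and
    # returns that same dict, so the assignment persists.
    camera_options = preset.setdefault("camera_options", {})
    camera_options["depthOfField"] = value
```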

|

|

@ -1,5 +1,6 @@

|

|||

import os

|

||||

import glob

|

||||

import tempfile

|

||||

|

||||

import capture

|

||||

|

||||

|

|

@@ -81,9 +82,17 @@ class ExtractThumbnail(publish.Extractor):
elif asset_width and asset_height:
preset['width'] = asset_width
preset['height'] = asset_height
stagingDir = self.staging_dir(instance)

# Create temp directory for thumbnail
# - this is to avoid "override" of source file
dst_staging = tempfile.mkdtemp(prefix="pyblish_tmp_")
self.log.debug(
"Create temp directory {} for thumbnail".format(dst_staging)
)
# Store new staging to cleanup paths
instance.context.data["cleanupFullPaths"].append(dst_staging)
filename = "{0}".format(instance.name)
path = os.path.join(stagingDir, filename)
path = os.path.join(dst_staging, filename)

self.log.info("Outputting images to %s" % path)

@@ -137,7 +146,7 @@ class ExtractThumbnail(publish.Extractor):
'name': 'thumbnail',
'ext': 'jpg',
'files': thumbnail,
"stagingDir": stagingDir,
"stagingDir": dst_staging,
"thumbnail": True
}
instance.data["representations"].append(representation)

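The two ExtractThumbnail hunks move the thumbnail output out of the instance staging directory into a fresh directory from `tempfile.mkdtemp(prefix="pyblish_tmp_")`, and append that directory to `instance.context.data["cleanupFullPaths"]` so it can be removed after publishing. The sketch below reproduces that pattern with a plain dict standing in for the pyblish `context.data` (an assumption for illustration). `make_thumbnail_staging` and `cleanup` are hypothetical helpers, and `cleanup` only imitates whatever plugin actually consumes `cleanupFullPaths`.

```python
import os
import shutil
import tempfile


def make_thumbnail_staging(context_data, instance_name):
    """Create a private staging dir for a thumbnail and register it for cleanup."""
    # A unique directory per call, so nothing in the instance
    # staging dir can be overwritten by the thumbnail capture.
    dst_staging = tempfile.mkdtemp(prefix="pyblish_tmp_")
    # setdefault: the original indexes the list directly, which
    # assumes it was created earlier in the publish.
    context_data.setdefault("cleanupFullPaths", []).append(dst_staging)
    path = os.path.join(dst_staging, "{0}".format(instance_name))
    representation = {
        "name": "thumbnail",
        "ext": "jpg",
        "stagingDir": dst_staging,
        "thumbnail": True,
    }
    return path, representation


def cleanup(context_data):
    """Hypothetical stand-in for the plugin that consumes cleanupFullPaths."""
    for full_path in context_data.get("cleanupFullPaths", []):
        shutil.rmtree(full_path, ignore_errors=True)
```

The `files` key from the original representation is left out here because it depends on the captured output, which this sketch does not produce.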
@@ -118,7 +118,7 @@ def preview_fname(folder, scene, layer, padding, ext):
"""

# Following hardcoded "<Scene>/<Scene>_<Layer>/<Layer>"
output = "maya/{scene}/{layer}/{layer}.{number}.{ext}".format(
output = "{scene}/{layer}/{layer}.{number}.{ext}".format(
scene=scene,
layer=layer,
number="#" * padding,

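After this change, `preview_fname` no longer prefixes its output with `maya/`. The format call can be exercised on its own; `preview_pattern` below is a hypothetical extraction of just that expression, assuming `ext` is passed through as the function signature suggests. How the original function combines the result with `folder` is not visible in the hunk, so that step is left out.

```python
def preview_pattern(scene, layer, padding, ext):
    """Build the relative preview path used after this change.

    Mirrors the new format string in the hunk; the leading "maya/"
    folder is no longer part of the pattern.
    """
    return "{scene}/{layer}/{layer}.{number}.{ext}".format(
        scene=scene,
        layer=layer,
        number="#" * padding,  # one '#' per frame-number digit
        ext=ext,
    )


print(preview_pattern("shot010", "beauty", 4, "exr"))
# shot010/beauty/beauty.####.exr
```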
@@ -22,10 +22,10 @@ def get_redshift_image_format_labels():
class ValidateRenderSettings(pyblish.api.InstancePlugin):
"""Validates the global render settings

* File Name Prefix must start with: `maya/<Scene>`
* File Name Prefix must start with: `<Scene>`
all other tokens are customizable but sane values for Arnold are:

`maya/<Scene>/<RenderLayer>/<RenderLayer>_<RenderPass>`
`<Scene>/<RenderLayer>/<RenderLayer>_<RenderPass>`

<Camera> token is supported also, useful for multiple renderable
cameras per render layer.

@@ -64,12 +64,12 @@ class ValidateRenderSettings(pyblish.api.InstancePlugin):
}

ImagePrefixTokens = {
'mentalray': 'maya/<Scene>/<RenderLayer>/<RenderLayer>{aov_separator}<RenderPass>', # noqa: E501
'arnold': 'maya/<Scene>/<RenderLayer>/<RenderLayer>{aov_separator}<RenderPass>', # noqa: E501
'redshift': 'maya/<Scene>/<RenderLayer>/<RenderLayer>',
'vray': 'maya/<Scene>/<Layer>/<Layer>',
'mentalray': '<Scene>/<RenderLayer>/<RenderLayer>{aov_separator}<RenderPass>', # noqa: E501
'arnold': '<Scene>/<RenderLayer>/<RenderLayer>{aov_separator}<RenderPass>', # noqa: E501
'redshift': '<Scene>/<RenderLayer>/<RenderLayer>',
'vray': '<Scene>/<Layer>/<Layer>',
'renderman': '<layer>{aov_separator}<aov>.<f4>.<ext>',
'mayahardware2': 'maya/<Scene>/<RenderLayer>/<RenderLayer>',
'mayahardware2': '<Scene>/<RenderLayer>/<RenderLayer>',
}

_aov_chars = {

@@ -80,7 +80,7 @@ class ValidateRenderSettings(pyblish.api.InstancePlugin):

redshift_AOV_prefix = "<BeautyPath>/<BeautyFile>{aov_separator}<RenderPass>" # noqa: E501

renderman_dir_prefix = "maya/<scene>/<layer>"
renderman_dir_prefix = "<scene>/<layer>"

R_AOV_TOKEN = re.compile(
r'%a|<aov>|<renderpass>', re.IGNORECASE)

@@ -90,8 +90,8 @@ class ValidateRenderSettings(pyblish.api.InstancePlugin):
R_SCENE_TOKEN = re.compile(r'%s|<scene>', re.IGNORECASE)

DEFAULT_PADDING = 4
VRAY_PREFIX = "maya/<Scene>/<Layer>/<Layer>"
DEFAULT_PREFIX = "maya/<Scene>/<RenderLayer>/<RenderLayer>_<RenderPass>"
VRAY_PREFIX = "<Scene>/<Layer>/<Layer>"
DEFAULT_PREFIX = "<Scene>/<RenderLayer>/<RenderLayer>_<RenderPass>"

def process(self, instance):

@@ -123,7 +123,6 @@ class ValidateRenderSettings(pyblish.api.InstancePlugin):
prefix = prefix.replace(
"{aov_separator}", instance.data.get("aovSeparator", "_"))

required_prefix = "maya/<scene>"
default_prefix = cls.ImagePrefixTokens[renderer]

if not anim_override:

@@ -131,15 +130,6 @@ class ValidateRenderSettings(pyblish.api.InstancePlugin):
cls.log.error("Animation needs to be enabled. Use the same "
"frame for start and end to render single frame")

if renderer != "renderman" and not prefix.lower().startswith(
required_prefix):
invalid = True
cls.log.error(
("Wrong image prefix [ {} ] "
" - doesn't start with: '{}'").format(
prefix, required_prefix)
)

if not re.search(cls.R_LAYER_TOKEN, prefix):
invalid = True
cls.log.error("Wrong image prefix [ {} ] - "

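With `required_prefix` and its `startswith` check removed, the validator no longer insists on a `maya/` root; what remains visible in these hunks is the `{aov_separator}` substitution and the regex token checks. A minimal sketch using the `R_AOV_TOKEN` and `R_SCENE_TOKEN` patterns exactly as shown. `R_LAYER_TOKEN` is not part of this diff, so the pattern below is a hypothetical stand-in, and `prefix_tokens` is an illustrative helper rather than the plugin's own logic.

```python
import re

# Patterns copied from the ValidateRenderSettings hunks.
R_AOV_TOKEN = re.compile(r'%a|<aov>|<renderpass>', re.IGNORECASE)
R_SCENE_TOKEN = re.compile(r'%s|<scene>', re.IGNORECASE)
# Hypothetical: the real R_LAYER_TOKEN is not shown in this diff.
R_LAYER_TOKEN = re.compile(r'%l|<layer>|<renderlayer>', re.IGNORECASE)

# New Arnold default from ImagePrefixTokens (no "maya/" root).
ARNOLD_PREFIX = '<Scene>/<RenderLayer>/<RenderLayer>{aov_separator}<RenderPass>'


def prefix_tokens(prefix, aov_separator="_"):
    """Expand {aov_separator} and report which tokens the prefix contains."""
    expanded = prefix.replace("{aov_separator}", aov_separator)
    return {
        "expanded": expanded,
        "scene": bool(R_SCENE_TOKEN.search(expanded)),
        "layer": bool(R_LAYER_TOKEN.search(expanded)),
        "aov": bool(R_AOV_TOKEN.search(expanded)),
    }


print(prefix_tokens(ARNOLD_PREFIX)["expanded"])
# <Scene>/<RenderLayer>/<RenderLayer>_<RenderPass>
```

All three patterns are compiled with `re.IGNORECASE`, so `<RenderPass>` and `<renderpass>` are treated alike.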
@ -1,22 +1,24 @@

|

|||

#### Basic setup

|

||||

## Basic setup

|

||||

|

||||

- Install [latest DaVinci Resolve](https://sw.blackmagicdesign.com/DaVinciResolve/v16.2.8/DaVinci_Resolve_Studio_16.2.8_Windows.zip?Key-Pair-Id=APKAJTKA3ZJMJRQITVEA&Signature=EcFuwQFKHZIBu2zDj5LTCQaQDXcKOjhZY7Fs07WGw24xdDqfwuALOyKu+EVzDX2Tik0cWDunYyV0r7hzp+mHmczp9XP4YaQXHdyhD/2BGWDgiMsiTQbNkBgbfy5MsAMFY8FHCl724Rxm8ke1foWeUVyt/Cdkil+ay+9sL72yFhaSV16sncko1jCIlCZeMkHhbzqPwyRuqLGmxmp8ey9KgBhI3wGFFPN201VMaV+RHrpX+KAfaR6p6dwo3FrPbRHK9TvMI1RA/1lJ3fVtrkDW69LImIKAWmIxgcStUxR9/taqLOD66FNiflHd1tufHv3FBa9iYQsjb3VLMPx7OCwLyg==&Expires=1608308139)

|

||||

- add absolute path to ffmpeg into openpype settings

|

||||

|

||||

- install Python 3.6 into `%LOCALAPPDATA%/Programs/Python/Python36` (only respected path by Resolve)

|

||||

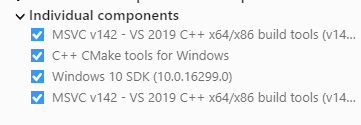

- install OpenTimelineIO for 3.6 `%LOCALAPPDATA%\Programs\Python\Python36\python.exe -m pip install git+https://github.com/PixarAnimationStudios/OpenTimelineIO.git@5aa24fbe89d615448876948fe4b4900455c9a3e8` and move built files from `%LOCALAPPDATA%/Programs/Python/Python36/Lib/site-packages/opentimelineio/cxx-libs/bin and lib` to `%LOCALAPPDATA%/Programs/Python/Python36/Lib/site-packages/opentimelineio/`. I was building it on Win10 machine with Visual Studio Community 2019 and

|

||||

- Actually supported version is up to v18

|

||||

- install Python 3.6.2 (latest tested v17) or up to 3.9.13 (latest tested on v18)

|

||||

- pip install PySide2:

|

||||

- Python 3.9.*: open terminal and go to python.exe directory, then `python -m pip install PySide2`

|

||||

- pip install OpenTimelineIO:

|

||||

- Python 3.9.*: open terminal and go to python.exe directory, then `python -m pip install OpenTimelineIO`

|

||||

- Python 3.6: open terminal and go to python.exe directory, then `python -m pip install git+https://github.com/PixarAnimationStudios/OpenTimelineIO.git@5aa24fbe89d615448876948fe4b4900455c9a3e8` and move built files from `./Lib/site-packages/opentimelineio/cxx-libs/bin and lib` to `./Lib/site-packages/opentimelineio/`. I was building it on Win10 machine with Visual Studio Community 2019 and

|

||||

with installed CMake in PATH.

|

||||

- install PySide2 for 3.6 `%LOCALAPPDATA%\Programs\Python\Python36\python.exe -m pip install PySide2`

|

||||

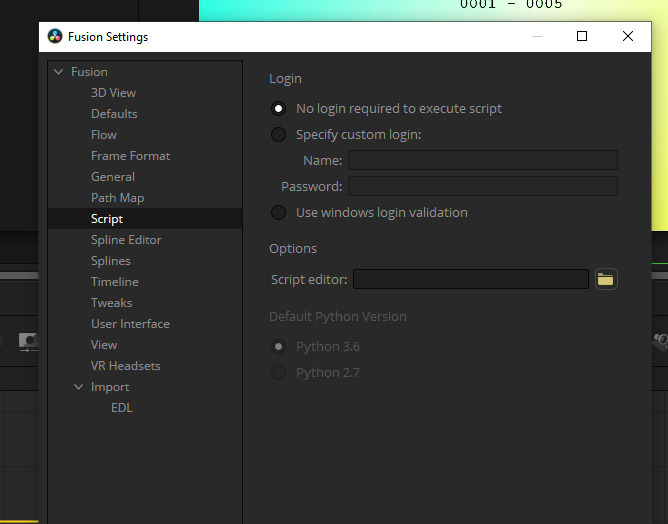

- make sure Resolve Fusion (Fusion Tab/menu/Fusion/Fusion Settings) is set to Python 3.6

|

||||

|

||||

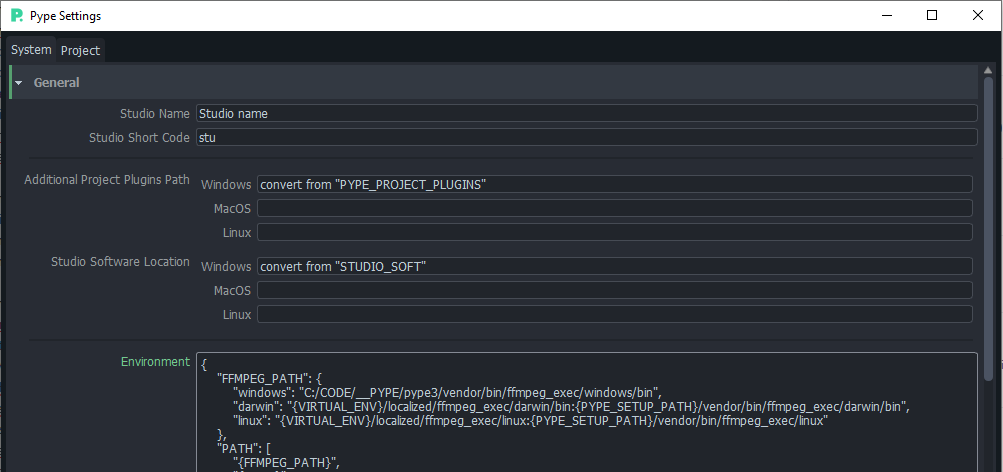

- Open OpenPype **Tray/Admin/Studio settings** > `applications/resolve/environment` and add Python3 path to `RESOLVE_PYTHON3_HOME` platform related.

|

||||

|

||||

#### Editorial setup

|

||||

## Editorial setup

|

||||

|

||||

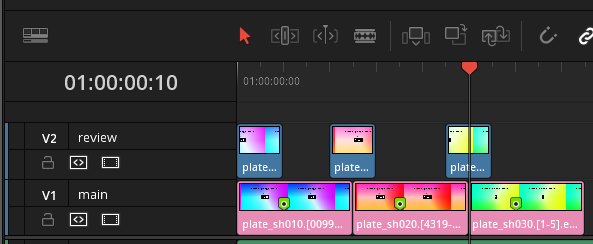

This is how it looks on my testing project timeline

|

||||

|

||||

Notice I had renamed tracks to `main` (holding metadata markers) and `review` used for generating review data with ffmpeg confersion to jpg sequence.

|

||||

|

||||

1. you need to start OpenPype menu from Resolve/EditTab/Menu/Workspace/Scripts/**__OpenPype_Menu__**

|

||||

1. you need to start OpenPype menu from Resolve/EditTab/Menu/Workspace/Scripts/Comp/**__OpenPype_Menu__**

|

||||

2. then select any clips in `main` track and change their color to `Chocolate`

|

||||

3. in OpenPype Menu select `Create`

|

||||

4. in Creator select `Create Publishable Clip [New]` (temporary name)

|

||||

|

|

|

|||

|

|

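The setup steps above come down to two checks on the interpreter Resolve will use: a Python version in the tested range (3.6 for v17, up to 3.9 for v18, per the notes) and importable `PySide2` and `opentimelineio` packages. A hypothetical helper to verify both, meant to be run with the same `python.exe` that `RESOLVE_PYTHON3_HOME` points at; the version bounds come from the notes, not from Resolve itself.

```python
import importlib.util
import sys

# Bounds taken from the setup notes: 3.6 (tested on v17) up to 3.9 (tested on v18).
MIN_PYTHON = (3, 6)
MAX_PYTHON = (3, 9)
REQUIRED_MODULES = ("PySide2", "opentimelineio")


def python_in_tested_range(version_info=None):
    """Return True if major.minor lies between MIN_PYTHON and MAX_PYTHON."""
    version = tuple((version_info or sys.version_info)[:2])
    return MIN_PYTHON <= version <= MAX_PYTHON


def missing_modules(names=REQUIRED_MODULES):
    """Return the names from `names` that this interpreter cannot find."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    print("Python", sys.version.split()[0],
          "in tested range:", python_in_tested_range())
    print("missing packages:", missing_modules() or "none")
```

`find_spec` only locates the package; a compiled OpenTimelineIO build with misplaced `cxx-libs` files may still fail at actual import time.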
@@ -1,189 +0,0 @@
Updated as of 08 March 2019

--------------------------
In this package, you will find a brief introduction to the Scripting API for DaVinci Resolve Studio. Apart from this README.txt file, this package contains folders containing the basic import modules for scripting access (DaVinciResolve.py) and some representative examples.

Overview
--------

As with Blackmagic Design Fusion scripts, user scripts written in Lua and Python programming languages are supported. By default, scripts can be invoked from the Console window in the Fusion page, or via command line. This permission can be changed in Resolve Preferences, to be only from Console, or to be invoked from the local network. Please be aware of the security implications when allowing scripting access from outside of the Resolve application.


Using a script
--------------
DaVinci Resolve needs to be running for a script to be invoked.

For a Resolve script to be executed from an external folder, the script needs to know of the API location.
You may need to set these environment variables to allow your Python installation to pick up the appropriate dependencies, as shown below:

Mac OS X:
RESOLVE_SCRIPT_API="/Library/Application Support/Blackmagic Design/DaVinci Resolve/Developer/Scripting/"
RESOLVE_SCRIPT_LIB="/Applications/DaVinci Resolve/DaVinci Resolve.app/Contents/Libraries/Fusion/fusionscript.so"
PYTHONPATH="$PYTHONPATH:$RESOLVE_SCRIPT_API/Modules/"

Windows:
RESOLVE_SCRIPT_API="%PROGRAMDATA%\\Blackmagic Design\\DaVinci Resolve\\Support\\Developer\\Scripting\\"
RESOLVE_SCRIPT_LIB="C:\\Program Files\\Blackmagic Design\\DaVinci Resolve\\fusionscript.dll"
PYTHONPATH="%PYTHONPATH%;%RESOLVE_SCRIPT_API%\\Modules\\"

Linux:
RESOLVE_SCRIPT_API="/opt/resolve/Developer/Scripting/"
RESOLVE_SCRIPT_LIB="/opt/resolve/libs/Fusion/fusionscript.so"
PYTHONPATH="$PYTHONPATH:$RESOLVE_SCRIPT_API/Modules/"
(Note: For standard ISO Linux installations, the path above may need to be modified to refer to /home/resolve instead of /opt/resolve)

As with Fusion scripts, Resolve scripts can also be invoked via the menu and the Console.

On startup, DaVinci Resolve scans the Utility Scripts directory and enumerates the scripts found in the Script application menu. Placing your script in this folder and invoking it from this menu is the easiest way to use scripts. The Utility Scripts folder is located in:
Mac OS X: /Library/Application Support/Blackmagic Design/DaVinci Resolve/Fusion/Scripts/Comp/
Windows: %APPDATA%\Blackmagic Design\DaVinci Resolve\Fusion\Scripts\Comp\
Linux: /opt/resolve/Fusion/Scripts/Comp/ (or /home/resolve/Fusion/Scripts/Comp/ depending on installation)

The interactive Console window allows for an easy way to execute simple scripting commands, to query or modify properties, and to test scripts. The console accepts commands in Python 2.7, Python 3.6 and Lua and evaluates and executes them immediately. For more information on how to use the Console, please refer to the DaVinci Resolve User Manual.

This example Python script creates a simple project:
#!/usr/bin/env python
import DaVinciResolveScript as dvr_script
resolve = dvr_script.scriptapp("Resolve")
fusion = resolve.Fusion()
projectManager = resolve.GetProjectManager()
projectManager.CreateProject("Hello World")

The resolve object is the fundamental starting point for scripting via Resolve. As a native object, it can be inspected for further scriptable properties - using table iteration and `getmetatable` in Lua and `dir`, `help`, etc. in Python (among other methods). A notable scriptable object above is fusion - it allows access to all existing Fusion scripting functionality.

Running DaVinci Resolve in headless mode
----------------------------------------

DaVinci Resolve can be launched in a headless mode without the user interface using the -nogui command line option. When DaVinci Resolve is launched using this option, the user interface is disabled. However, the various scripting APIs will continue to work as expected.

Basic Resolve API
-----------------

Some commonly used API functions are described below (*). As with the resolve object, each object is inspectable for properties and functions.


Resolve
  Fusion() --> Fusion # Returns the Fusion object. Starting point for Fusion scripts.
  GetMediaStorage() --> MediaStorage # Returns media storage object to query and act on media locations.
  GetProjectManager() --> ProjectManager # Returns project manager object for currently open database.
  OpenPage(pageName) --> None # Switches to indicated page in DaVinci Resolve. Input can be one of ("media", "edit", "fusion", "color", "fairlight", "deliver").
ProjectManager
  CreateProject(projectName) --> Project # Creates and returns a project if projectName (text) is unique, and None if it is not.
  LoadProject(projectName) --> Project # Loads and returns the project with name = projectName (text) if there is a match found, and None if there is no matching Project.
  GetCurrentProject() --> Project # Returns the currently loaded Resolve project.
  SaveProject() --> Bool # Saves the currently loaded project with its own name. Returns True if successful.
  CreateFolder(folderName) --> Bool # Creates a folder if folderName (text) is unique.
  GetProjectsInCurrentFolder() --> [project names...] # Returns an array of project names in current folder.
  GetFoldersInCurrentFolder() --> [folder names...] # Returns an array of folder names in current folder.
  GotoRootFolder() --> Bool # Opens root folder in database.
  GotoParentFolder() --> Bool # Opens parent folder of current folder in database if current folder has parent.
  OpenFolder(folderName) --> Bool # Opens folder under given name.
  ImportProject(filePath) --> Bool # Imports a project under given file path. Returns true in case of success.
  ExportProject(projectName, filePath) --> Bool # Exports a project based on given name into provided file path. Returns true in case of success.
  RestoreProject(filePath) --> Bool # Restores a project under given backup file path. Returns true in case of success.
Project
  GetMediaPool() --> MediaPool # Returns the Media Pool object.
  GetTimelineCount() --> int # Returns the number of timelines currently present in the project.
  GetTimelineByIndex(idx) --> Timeline # Returns timeline at the given index, 1 <= idx <= project.GetTimelineCount()
  GetCurrentTimeline() --> Timeline # Returns the currently loaded timeline.
  SetCurrentTimeline(timeline) --> Bool # Sets given timeline as current timeline for the project. Returns True if successful.
  GetName() --> string # Returns project name.
  SetName(projectName) --> Bool # Sets project name if given projectName (text) is unique.
  GetPresets() --> [presets...] # Returns a table of presets and their information.
  SetPreset(presetName) --> Bool # Sets preset by given presetName (string) into project.
  GetRenderJobs() --> [render jobs...] # Returns a table of render jobs and their information.
  GetRenderPresets() --> [presets...] # Returns a table of render presets and their information.
  StartRendering(index1, index2, ...) --> Bool # Starts rendering for given render jobs based on their indices. If no parameter is given rendering would start for all render jobs.
  StartRendering([idxs...]) --> Bool # Starts rendering for given render jobs based on their indices. If no parameter is given rendering would start for all render jobs.
  StopRendering() --> None # Stops rendering for all render jobs.
  IsRenderingInProgress() --> Bool # Returns true if rendering is in progress.
  AddRenderJob() --> Bool # Adds render job to render queue.
  DeleteRenderJobByIndex(idx) --> Bool # Deletes render job based on given job index (int).
  DeleteAllRenderJobs() --> Bool # Deletes all render jobs.
  LoadRenderPreset(presetName) --> Bool # Sets a preset as current preset for rendering if presetName (text) exists.
  SaveAsNewRenderPreset(presetName) --> Bool # Creates a new render preset by given name if presetName (text) is unique.
  SetRenderSettings([settings map]) --> Bool # Sets given settings for rendering. Settings map is a map, keys of map are: "SelectAllFrames", "MarkIn", "MarkOut", "TargetDir", "CustomName".
  GetRenderJobStatus(idx) --> [status info] # Returns job status and completion rendering percentage of the job by given job index (int).
  GetSetting(settingName) --> string # Returns setting value by given settingName (string) if the setting exists. With empty settingName the function returns a full list of settings.
  SetSetting(settingName, settingValue) --> Bool # Sets project setting based on given name (string) and value (string).
  GetRenderFormats() --> [render formats...] # Returns a list of available render formats.
  GetRenderCodecs(renderFormat) --> [render codecs...] # Returns a list of available codecs for given render format (string).
  GetCurrentRenderFormatAndCodec() --> [format, codec] # Returns currently selected render format and render codec.
  SetCurrentRenderFormatAndCodec(format, codec) --> Bool # Sets given render format (string) and render codec (string) as options for rendering.
MediaStorage
  GetMountedVolumes() --> [paths...] # Returns an array of folder paths corresponding to mounted volumes displayed in Resolve’s Media Storage.
  GetSubFolders(folderPath) --> [paths...] # Returns an array of folder paths in the given absolute folder path.
  GetFiles(folderPath) --> [paths...] # Returns an array of media and file listings in the given absolute folder path. Note that media listings may be logically consolidated entries.
  RevealInStorage(path) --> None # Expands and displays a given file/folder path in Resolve’s Media Storage.
  AddItemsToMediaPool(item1, item2, ...) --> [clips...] # Adds specified file/folder paths from Media Store into current Media Pool folder. Input is one or more file/folder paths.
  AddItemsToMediaPool([items...]) --> [clips...] # Adds specified file/folder paths from Media Store into current Media Pool folder. Input is an array of file/folder paths.
MediaPool
  GetRootFolder() --> Folder # Returns the root Folder of Media Pool
  AddSubFolder(folder, name) --> Folder # Adds a new subfolder under specified Folder object with the given name.
  CreateEmptyTimeline(name) --> Timeline # Adds a new timeline with given name.
  AppendToTimeline(clip1, clip2...) --> Bool # Appends specified MediaPoolItem objects in the current timeline. Returns True if successful.
  AppendToTimeline([clips]) --> Bool # Appends specified MediaPoolItem objects in the current timeline. Returns True if successful.
  CreateTimelineFromClips(name, clip1, clip2, ...) --> Timeline # Creates a new timeline with specified name, and appends the specified MediaPoolItem objects.
  CreateTimelineFromClips(name, [clips]) --> Timeline # Creates a new timeline with specified name, and appends the specified MediaPoolItem objects.
  ImportTimelineFromFile(filePath) --> Timeline # Creates timeline based on parameters within given file.
  GetCurrentFolder() --> Folder # Returns currently selected Folder.
  SetCurrentFolder(Folder) --> Bool # Sets current folder by given Folder.
Folder
  GetClips() --> [clips...] # Returns a list of clips (items) within the folder.
  GetName() --> string # Returns user-defined name of the folder.
  GetSubFolders() --> [folders...] # Returns a list of subfolders in the folder.
MediaPoolItem
  GetMetadata(metadataType) --> [[types],[values]] # Returns a value of metadataType. If parameter is not specified returns all set metadata parameters.
  SetMetadata(metadataType, metadataValue) --> Bool # Sets metadata by given type and value. Returns True if successful.
  GetMediaId() --> string # Returns a unique ID name related to MediaPoolItem.
  AddMarker(frameId, color, name, note, duration) --> Bool # Creates a new marker at given frameId position and with given marker information.
  GetMarkers() --> [markers...] # Returns a list of all markers and their information.
  AddFlag(color) --> Bool # Adds a flag with given color (text).
  GetFlags() --> [colors...] # Returns a list of flag colors assigned to the item.
  GetClipColor() --> string # Returns an item color as a string.
  GetClipProperty(propertyName) --> [[types],[values]] # Returns property value related to the item based on given propertyName (string). If propertyName is empty then it returns a full list of properties.
  SetClipProperty(propertyName, propertyValue) --> Bool # Sets into given propertyName (string) propertyValue (string).
Timeline
  GetName() --> string # Returns user-defined name of the timeline.
  SetName(timelineName) --> Bool # Sets timeline name if timelineName (text) is unique.
  GetStartFrame() --> int # Returns frame number at the start of timeline.
  GetEndFrame() --> int # Returns frame number at the end of timeline.
  GetTrackCount(trackType) --> int # Returns the number of tracks based on specified track type ("audio", "video" or "subtitle").
  GetItemsInTrack(trackType, index) --> [items...] # Returns an array of Timeline items on the video or audio track (based on trackType) at specified index. 1 <= index <= GetTrackCount(trackType).
  AddMarker(frameId, color, name, note, duration) --> Bool # Creates a new marker at given frameId position and with given marker information.
  GetMarkers() --> [markers...] # Returns a list of all markers and their information.
  ApplyGradeFromDRX(path, gradeMode, item1, item2, ...) --> Bool # Loads a still from given file path (string) and applies grade to Timeline Items with gradeMode (int): 0 - "No keyframes", 1 - "Source Timecode aligned", 2 - "Start Frames aligned".
  ApplyGradeFromDRX(path, gradeMode, [items]) --> Bool # Loads a still from given file path (string) and applies grade to Timeline Items with gradeMode (int): 0 - "No keyframes", 1 - "Source Timecode aligned", 2 - "Start Frames aligned".
  GetCurrentTimecode() --> string # Returns a string representing a timecode for current position of the timeline, while on Cut, Edit, Color and Deliver page.
  GetCurrentVideoItem() --> item # Returns current video timeline item.
  GetCurrentClipThumbnailImage() --> [width, height, format, data] # Returns raw thumbnail image data (This image data is encoded in base 64 format and the image format is RGB 8 bit) for the current media in the Color Page in the format of dictionary (in Python) and table (in Lua). Information returned is "width", "height", "format" and "data". Example is provided in 6_get_current_media_thumbnail.py in Example folder.
TimelineItem
  GetName() --> string # Returns a name of the item.
  GetDuration() --> int # Returns a duration of item.
  GetEnd() --> int # Returns a position of end frame.
  GetFusionCompCount() --> int # Returns the number of Fusion compositions associated with the timeline item.
  GetFusionCompByIndex(compIndex) --> fusionComp # Returns Fusion composition object based on given index. 1 <= compIndex <= timelineItem.GetFusionCompCount()
  GetFusionCompNames() --> [names...] # Returns a list of Fusion composition names associated with the timeline item.
  GetFusionCompByName(compName) --> fusionComp # Returns Fusion composition object based on given name.
  GetLeftOffset() --> int # Returns a maximum extension by frame for clip from left side.
  GetRightOffset() --> int # Returns a maximum extension by frame for clip from right side.
  GetStart() --> int # Returns a position of first frame.
  AddMarker(frameId, color, name, note, duration) --> Bool # Creates a new marker at given frameId position and with given marker information.
  GetMarkers() --> [markers...] # Returns a list of all markers and their information.
  GetFlags() --> [colors...] # Returns a list of flag colors assigned to the item.
  GetClipColor() --> string # Returns an item color as a string.
  AddFusionComp() --> fusionComp # Adds a new Fusion composition associated with the timeline item.
  ImportFusionComp(path) --> fusionComp # Imports Fusion composition from given file path by creating and adding a new composition for the item.
  ExportFusionComp(path, compIndex) --> Bool # Exports Fusion composition based on given index into provided file name path.
  DeleteFusionCompByName(compName) --> Bool # Deletes Fusion composition by provided name.
  LoadFusionCompByName(compName) --> fusionComp # Loads Fusion composition by provided name and sets it as active composition.
  RenameFusionCompByName(oldName, newName) --> Bool # Renames Fusion composition by provided name with new given name.
  AddVersion(versionName, versionType) --> Bool # Adds a new Version associated with the timeline item. versionType: 0 - local, 1 - remote.
  DeleteVersionByName(versionName, versionType) --> Bool # Deletes Version by provided name. versionType: 0 - local, 1 - remote.
  LoadVersionByName(versionName, versionType) --> Bool # Loads Version by provided name and sets it as active Version. versionType: 0 - local, 1 - remote.
  RenameVersionByName(oldName, newName, versionType) --> Bool # Renames Version by provided name with new given name. versionType: 0 - local, 1 - remote.
  GetMediaPoolItem() --> MediaPoolItem # Returns the media pool item corresponding to the timeline item, if it exists.
  GetVersionNames(versionType) --> [strings...] # Returns a list of version names by provided versionType: 0 - local, 1 - remote.
  GetStereoConvergenceValues() --> [offset, value] # Returns a table of keyframe offsets and respective convergence values
  GetStereoLeftFloatingWindowParams() --> [offset, value] # For the LEFT eye -> returns a table of keyframe offsets and respective floating window params. Value at particular offset includes the left, right, top and bottom floating window values
  GetStereoRightFloatingWindowParams() --> [offset, value] # For the RIGHT eye -> returns a table of keyframe offsets and respective floating window params. Value at particular offset includes the left, right, top and bottom floating window values

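The README lists the same three variables for each platform, differing only in paths and in the `PYTHONPATH` separator. A minimal sketch that assembles them from the default locations quoted above; `resolve_script_env` and `RESOLVE_DEFAULTS` are hypothetical names, the platform keys follow `sys.platform` values, and non-default installs (such as the `/home/resolve` Linux variant) would need their own paths.

```python
import ntpath
import posixpath

# (RESOLVE_SCRIPT_API, RESOLVE_SCRIPT_LIB) defaults copied from the README.
RESOLVE_DEFAULTS = {
    "darwin": (
        "/Library/Application Support/Blackmagic Design/DaVinci Resolve/Developer/Scripting/",
        "/Applications/DaVinci Resolve/DaVinci Resolve.app/Contents/Libraries/Fusion/fusionscript.so",
    ),
    "win32": (
        "%PROGRAMDATA%\\Blackmagic Design\\DaVinci Resolve\\Support\\Developer\\Scripting\\",
        "C:\\Program Files\\Blackmagic Design\\DaVinci Resolve\\fusionscript.dll",
    ),
    "linux": (
        "/opt/resolve/Developer/Scripting/",
        "/opt/resolve/libs/Fusion/fusionscript.so",
    ),
}


def resolve_script_env(platform, existing_pythonpath=""):
    """Return the three README variables for one platform key."""
    api, lib = RESOLVE_DEFAULTS[platform]
    if platform == "win32":
        # Windows: backslash paths, ';' separates PYTHONPATH entries.
        modules, sep = ntpath.join(api, "Modules"), ";"
    else:
        # macOS and Linux: forward slashes, ':' separates entries.
        modules, sep = posixpath.join(api, "Modules"), ":"
    entries = [p for p in (existing_pythonpath, modules) if p]
    return {
        "RESOLVE_SCRIPT_API": api,
        "RESOLVE_SCRIPT_LIB": lib,
        "PYTHONPATH": sep.join(entries),
    }
```

To use it, merge the result into a copy of `os.environ` and pass that as `env=` to `subprocess.run` when launching an external script; setting `PYTHONPATH` inside an interpreter that is already running does not change its `sys.path`. `%PROGRAMDATA%` is left unexpanded here; on Windows, `os.path.expandvars` can expand it if needed.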


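`GetCurrentClipThumbnailImage()` is documented as returning a dictionary with `width`, `height`, `format` and `data`, where `data` is base64-encoded 8-bit RGB. A sketch that turns that dictionary into binary PPM bytes, one of the simplest image formats able to hold raw RGB; it assumes tightly packed rows in top-to-bottom order, which the README does not state, and `thumbnail_to_ppm` is a hypothetical helper.

```python
import base64


def thumbnail_to_ppm(thumb):
    """Convert a GetCurrentClipThumbnailImage() result into binary PPM bytes.

    Assumes `data` holds width * height tightly packed RGB pixels,
    8 bits per channel, rows top to bottom.
    """
    width = int(thumb["width"])
    height = int(thumb["height"])
    pixels = base64.b64decode(thumb["data"])
    expected = width * height * 3  # three 8-bit channels per pixel
    if len(pixels) != expected:
        raise ValueError(
            "expected {} bytes of RGB data, got {}".format(expected, len(pixels)))
    # Binary PPM ("P6") header: magic number, dimensions, max channel value.
    header = "P6\n{} {}\n255\n".format(width, height).encode("ascii")
    return header + pixels
```

The returned bytes can be written to a `.ppm` file opened in binary mode; the length check catches a mismatch early if the real data turns out to be padded or in another layout.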
@@ -1,5 +1,5 @@
Updated as of 20 October 2020
-----------------------------
Updated as of 9 May 2022
----------------------------
In this package, you will find a brief introduction to the Scripting API for DaVinci Resolve Studio. Apart from this README.txt file, this package contains folders containing the basic import
modules for scripting access (DaVinciResolve.py) and some representative examples.

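The `Project` render calls listed in the README can be chained into a queue-and-wait routine: optionally `LoadRenderPreset`, then `SetRenderSettings` with keys such as `TargetDir` and `CustomName`, `AddRenderJob`, `StartRendering`, and polling `IsRenderingInProgress`. A minimal sketch, assuming each call returns a truthy value on success (as the Bool return types suggest) and that the settings map accepts plain Python values; `render_with_settings` and `FakeRenderProject` are hypothetical, and the fake only exists so the loop can run outside Resolve.

```python
import time


def render_with_settings(project, settings, preset=None,
                         poll_interval=1.0, sleep=time.sleep):
    """Queue one render job on `project` and block until rendering stops.

    Returns the number of polls that saw rendering still in progress.
    """
    if preset is not None and not project.LoadRenderPreset(preset):
        raise RuntimeError("render preset not found: {}".format(preset))
    if not project.SetRenderSettings(settings):
        raise RuntimeError("render settings were rejected")
    if not project.AddRenderJob():
        raise RuntimeError("could not add a render job")
    # With no arguments, StartRendering() starts every queued job.
    if not project.StartRendering():
        raise RuntimeError("rendering did not start")
    polls = 0
    while project.IsRenderingInProgress():
        polls += 1
        sleep(poll_interval)
    return polls


class FakeRenderProject:
    """Stand-in that reports 'in progress' for a fixed number of polls."""

    def __init__(self, busy_polls):
        self.busy_polls = busy_polls
        self.settings = None
        self.jobs = 0

    def LoadRenderPreset(self, name):
        return name == "review_preset"  # hypothetical preset name

    def SetRenderSettings(self, settings):
        self.settings = dict(settings)
        return True

    def AddRenderJob(self):
        self.jobs += 1
        return True

    def StartRendering(self):
        return self.jobs > 0

    def IsRenderingInProgress(self):
        if self.busy_polls > 0:
            self.busy_polls -= 1
            return True
        return False
```

`sleep` is injectable so the loop can be tested without waiting; inside Resolve, `project` would be the object returned by `GetProjectManager().GetCurrentProject()`. `GetRenderJobStatus(idx)` could report completion percentage, but its return shape is only loosely described in the README, so the sketch does not rely on it.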
@@ -89,12 +89,25 @@ Resolve
  Fusion() --> Fusion # Returns the Fusion object. Starting point for Fusion scripts.
  GetMediaStorage() --> MediaStorage # Returns the media storage object to query and act on media locations.
  GetProjectManager() --> ProjectManager # Returns the project manager object for currently open database.